With Decart’s New Model, Real-time Video Transformation Just Got Real

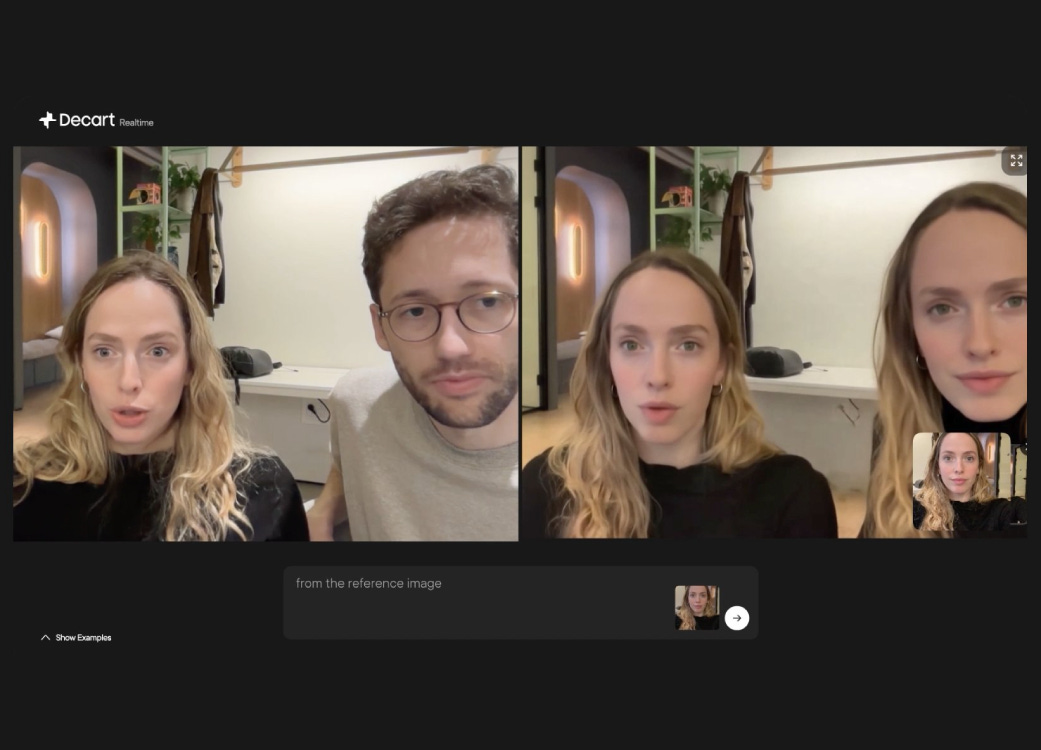

Real-time video transformation in livestreaming is finally a thing following the launch of Decart’s new flagship model, Lucy 2. More than just a video generation model, Lucy 2 is what Decart describes as a “world model”: a system designed to understand and simulate real-world dynamics, including physical interactions and spatial properties, and to apply that understanding to its video output.

It promises to be a game changer for AI video because it can run continuously and edit live video feeds on the fly, without any buffering. This means post-processing is no longer required to generate realistic, high-quality video content with AI.

Lucy 2 can do this because it’s designed to generate video in a single, ongoing stream from the moment the camera is switched on. Content is generated frame by frame, indefinitely, with each frame preserving the full-body movement, physical presence and timing of the one before it. The result, according to Decart, is higher-quality, more accurate content, free from the continuity errors that have long bedeviled AI-generated video.

Generative Video as a Living System

Decart technical expert Metar Megiora said Lucy 2 was designed for creators, livestreamers and other professionals who want visual transformations to happen in real time. These include social media influencers livestreaming on TikTok or YouTube, as well as developer teams building interactive entertainment and other applications, such as real-time video communication.

Generative AI has long held tons of promise for livestreamers and video-based applications, but most users have been left with a bad taste in their mouth, either because of the mediocre quality of the generated content or because the output of models like OpenAI’s Sora or Google’s Veo needs post-production work.

Megiora said the problem is that these models’ outputs require endless re-prompting or manual editing before they start to resemble a “real” video. “Most well-known video models today are designed to generate clips: they take an input, process it in batches, and output a closed video segment,” Megiora explained.

“Even when the result appears ‘live,’ it is in practice an offline process that includes buffering, chunking, and sometimes post-processing. These models do not maintain continuous state, so any change in motion, prompt, or identity requires recomputation, which can take several minutes of waiting.”

It’s because of this poor experience that most creators either avoid using generative video entirely in their livestreams, or employ heavy workarounds that ruin the immersion or limit what’s possible with live video.

Lucy 2 turns the concept of AI video generation on its head, Megiora said. Rather than generating clips or segments, it outputs a continuous, uninterrupted stream of AI-generated video that preserves motion, posture and timing in real time while allowing identity and appearance to change on the fly.


“Lucy 2 is … designed from the ground up to function as a live system: it operates continuously and autoregressively, generating frame after frame with no batching or buffering,” he said. “Each frame is produced as a direct continuation of the previous one, while preserving full context and state, including motion, identity, lighting, and physical coherence.”
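To make the difference between the two approaches more concrete, here is a toy sketch in Python. It is not Decart’s model or API: `toy_model_step` is a hypothetical stand-in that blends each incoming camera frame (a single number here, rather than an image) with the previous output. The streaming loop carries state from frame to frame and emits each output as soon as its input arrives, while the clip-based function resets state at every clip boundary, mirroring Megiora’s point that clip models “do not maintain continuous state.”

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Tuple


@dataclass(frozen=True)
class State:
    # Hypothetical carried context; a real world model would hold
    # latent tensors describing motion, identity and lighting, not a float.
    prev: float = 0.0
    prompt: str = "none"


def toy_model_step(state: State, frame: float, prompt: str) -> Tuple[float, State]:
    """Stand-in for one autoregressive model call (NOT Decart's model).

    The output depends on the new camera frame AND the previous output,
    so temporal continuity is carried forward in the returned state.
    """
    out = 0.5 * frame + 0.5 * state.prev
    return out, State(prev=out, prompt=prompt)


def stream_generate(feed: Iterable[Tuple[float, str]]) -> Iterator[Tuple[float, str]]:
    """Frame-by-frame loop: each output is yielded as soon as its input arrives.

    The prompt can change on any frame without resetting the carried state.
    """
    state = State()
    for frame, prompt in feed:
        out, state = toy_model_step(state, frame, prompt)
        yield out, state.prompt


def clip_generate(frames: List[float], clip_len: int) -> List[List[float]]:
    """Clip-based contrast: process the feed in fixed-length clips.

    In a live setting a clip can only start once all `clip_len` frames
    have arrived. State resets at each clip boundary, and trailing frames
    that do not fill a whole clip are left unprocessed here.
    """
    clips = []
    for start in range(0, len(frames) - clip_len + 1, clip_len):
        state = State()  # no context carried across clips
        clip = []
        for frame in frames[start:start + clip_len]:
            out, state = toy_model_step(state, frame, state.prompt)
            clip.append(out)
        clips.append(clip)
    return clips
```

Feeding four identical frames through `stream_generate` while switching the prompt from `"knight"` to `"robot"` halfway yields outputs of 0.5, 0.75, 0.875 and 0.9375: the output evolves smoothly across the prompt change. The same frames passed to `clip_generate(frames, 2)` yield `[[0.5, 0.75], [0.5, 0.75]]`, with a visible jump back to 0.5 at the clip boundary, a crude analogue of the discontinuities clip-based generation can introduce.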

Megiora said the original Lucy model laid the foundations for Lucy 2 by introducing live generation capabilities, but it had clear limitations in stability, identity control and contextual understanding. Because of these issues, he said, it struggled to maintain consistent character structure and visual fidelity in clips longer than about 30 seconds.

With Lucy 2, Megiora said, users can now achieve “state-of-the-art” video quality from a real-time model. He explained that it has a much deeper understanding of physics, motion and scene structure, resulting in markedly better output.

“It enables live identity control via reference images streamed in real time, without pre-locking the character, and it maintains visual consistency even over long runs,” he said. “At the same time, we achieved both higher quality and lower latency, a combination that is considered particularly difficult to accomplish from a research perspective.”

Promising Real-time Experiences

The model lends itself to a range of applications. For example, influencers can enhance TikTok livestreams by applying different thematic filters or dynamically altering their appearance or backdrop in real time via simple prompts. In virtual meetings, users can create customizable avatars with much greater realism, and in education, a history teacher could stand in front of a recreation of the Colosseum packed with spectators during a lesson on ancient Rome.

“The primary use case is streaming and live experiences, rather than closed, pre-generated outputs,” Megiora said. “This includes streamers appearing as characters in real time, live performances where identity and style change dynamically, interactive experiences where video responds to the user, and everyday communication scenarios in which video itself becomes a dynamic rather than static layer.”

Megiora said Decart is not trying to monetize Lucy 2 at this stage and has instead focused on making the model accessible to as many creators as possible by deploying it on streaming platforms such as TikTok Live, YouTube Live and Kick. The intention is to sit back and see how adoption grows organically within livestreaming culture, and he said progress so far has been extremely encouraging.

“Once creators were exposed to Lucy 2’s capabilities, especially the ability to appear as a character in real time with no latency and without harming motion or presence, they began using it naturally in live broadcasts,” Megiora said.