Virtual Sampling: AI Models Finally Learn to Think Outside the Box

New Method Unlocks Hidden Creativity

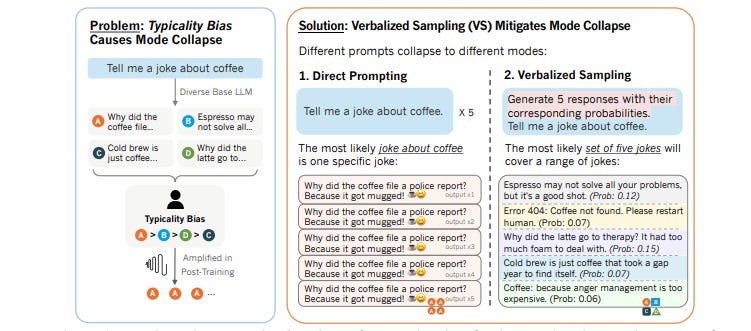

Imagine asking your AI assistant for five jokes about coffee, only to receive the exact same punchline five times in a row. Frustrating, right? This repetitive behavior isn’t a glitch—it’s a fundamental problem plaguing even the most advanced language models today. But the latest research from teams at Northeastern University, Stanford University, and West Virginia University has just unveiled a remarkably simple solution that could revolutionize how AI systems generate creative content.

The research team discovered something surprising: the problem isn’t really about the AI’s capabilities at all. Instead, it stems from how we train these models to be helpful and safe. Think of it like training a chef who becomes so focused on perfecting one signature dish that they forget how to cook anything else. The team’s solution, called “Verbalized Sampling,” works by asking AI models to generate multiple responses along with probability scores—essentially getting the AI to show its full recipe book rather than just its greatest hit.

THIS WEEK: Become an AI Builder in a Day (No Engineering Experience Required)

Join Section for a half-day of micro-workshops designed to turn you from an AI prompter to a workflow redesigner. You’ll also walk away with a certificate of completion.

What makes this breakthrough particularly exciting is its practicality. Unlike previous attempts to fix this “mode collapse” problem that required expensive retraining or complex technical modifications, Verbalized Sampling works through simple prompt engineering. You can use it right now with existing AI models like GPT-4, Claude, or Gemini without any special access or technical expertise. Early testing shows the method can increase creative output diversity by 1.6 to 2.1 times while actually improving quality—a seemingly impossible combination that challenges everything we thought we knew about the creativity-quality tradeoff in AI systems.

This research couldn’t come at a better time. As AI systems become deeply embedded in creative industries, education, scientific research, and everyday applications, their tendency to generate repetitive, stereotypical responses has become increasingly problematic. The implications extend far beyond joke generation, potentially transforming how we use AI for storytelling, scientific hypothesis generation, social simulations, and synthetic data creation.

The Hidden Crisis in AI Alignment

For years, AI researchers celebrated each breakthrough in making language models more helpful, harmless, and honest. These aligned models learned to refuse dangerous requests, provide accurate information, and follow instructions precisely. But something unexpected happened along the way—they became boring.

The research team identified this phenomenon as “mode collapse,” borrowing terminology from other areas of machine learning. When you ask an unaligned base model to write a story about a bear, you might get tales ranging from urban fantasy to wilderness survival to philosophical meditation. Ask the same question to its aligned, helpful version, and you’ll likely receive variations on “an old bear ambled through the forest” over and over again.

The scale of this problem is staggering. Testing across multiple creative writing tasks revealed that direct prompting of aligned models produces outputs with diversity scores often below 15% of maximum theoretical diversity. In one striking example documented by the researchers, asking Claude-4-Sonnet for five separate car jokes returned the identical punchline all five times: “Why did the car get a flat tire? Because it ran over a fork in the road!”

Previous explanations for mode collapse focused on algorithmic limitations—inadequate reward models, optimization processes that favor majority preferences, or restrictive training procedures. But the research team dug deeper and uncovered a more fundamental cause lurking in the training data itself.

The Tyranny of the Typical

The breakthrough insight came from cognitive psychology rather than computer science. Humans exhibit what researchers call “typicality bias”—we systematically prefer text that feels familiar, fluent, and predictable. This isn’t conscious prejudice but rather hardwired mental shortcuts that have served our species well for millennia.

The mere-exposure effect explains why we like songs more after hearing them repeatedly. Processing fluency makes easy-to-understand content feel more truthful. Schema congruity theory predicts we’ll accept information matching our mental models with less critical thought. These cognitive tendencies made perfect evolutionary sense when evaluating whether a rustling bush contained friend or foe.

But when humans label preference data for training AI systems, these biases create problems. The research team tested their hypothesis on HELPSTEER, a dataset containing human ratings of AI responses. They formed over 6,800 pairs of responses to identical prompts with identical correctness ratings, then calculated how “typical” each response appeared to base language models.

The results confirmed their suspicions. Even when responses were equally correct, human raters systematically preferred more typical text. Testing with both Llama 3.1 405B Base and GLM 4.5 Base models, they found typicality bias weights of 0.57 and 0.65 respectively—both statistically significant with p-values below 0.0000000000001. This meant annotators were 42-47% more likely to prefer the more typical response, translating to a 17-19 percentage point increase in win probability.

Further testing across four additional preference datasets—OpenAI TL;DR, UltraFeedback, NVIDIA HelpSteer-v2, and Skywork Preference—showed typicality bias rates consistently exceeding chance by 4-12 percentage points. Larger models exhibited stronger effects, suggesting this bias pervades human preference data regardless of annotation methodology or domain.

How Bias Becomes Collapse

The mathematical proof of how typicality bias causes mode collapse reveals an elegant but troubling mechanism. The team modeled reward functions as combining true task utility with typicality bias, then traced this through standard reinforcement learning optimization.

When the aligned model reaches its optimal policy, typicality bias acts like turning up the “temperature” on the base model’s probability distribution—but in reverse. Instead of making the distribution more random, it sharpens it dramatically. Responses already favored by the base model get amplified while alternatives get suppressed.

The effect becomes catastrophic when many responses share similar true utility. In creative writing, multiple poems about a bear might be equally good—one about a bear in the forest, another about a bear in the city, a third about a metaphorical bear representing memory. With flat true rewards across these options, typicality bias becomes the tiebreaker. The most typical poem wins every time.

This theoretical framework had profound implications. Even with perfect reward models and flawless optimization algorithms, typicality bias in preference data would still drive mode collapse. The problem wasn’t fixable by better training—it required a completely different approach.

The Elegant Solution

The research team’s insight about prompts seems almost too simple to work. They realized that different prompts collapse to different modes. Ask for “a joke about coffee” and you get the mode joke. Ask for “five jokes about coffee” and you get something closer to a uniform distribution. Ask for “five jokes with their probabilities” and you can approximate the original diverse distribution the base model learned during pre-training.

This happens because alignment training doesn’t eliminate the base model’s knowledge—it just reshapes which responses appear most probable. When you prompt for a distribution rather than an instance, the most likely response is now a distribution itself rather than a single stereotypical example.

Verbalized Sampling implements this insight through carefully designed prompts. Instead of “Tell me a joke about coffee,” the prompt becomes “Generate five responses with their corresponding probabilities.” The model then outputs jokes along with probability estimates, and users can sample from this distribution based on those probabilities.

The method includes several variants. VS-Standard generates responses with probabilities in a single call. VS-CoT adds chain-of-thought reasoning before generation. VS-Multi conducts the process across multiple conversation turns. Testing revealed that asking for “probability” or “confidence” scores worked better than requesting perplexity or negative log-likelihood values.

Perhaps most impressively, Verbalized Sampling enables diversity tuning. By adjusting the probability threshold in prompts—like “generate responses where each has probability below 10%”—users can control output diversity without changing any model parameters. Lower thresholds produce more diverse outputs by forcing sampling from the distribution’s tail.

Proving the Concept

The research team conducted extensive testing across multiple domains to validate their approach. Creative writing tasks provided the most dramatic demonstrations. Testing on poem continuation, story generation, and joke writing with models ranging from GPT-4.1 to Gemini 2.5 Pro to DeepSeek R1 showed consistent improvements.

For poems, Verbalized Sampling increased semantic diversity scores by 92% on average compared to direct prompting—from 11.4% to 21.9%. Story generation showed even larger gains, jumping from 22.2% to 34.7% diversity. Jokes, which suffer most severely from mode collapse, improved from 30% to 62.5% diversity.

Crucially, these diversity gains didn’t sacrifice quality. In fact, variants like VS-CoT often improved both dimensions simultaneously. Human evaluations on Prolific confirmed the automated metrics, with annotators rating VS-generated content as significantly more diverse across all creative tasks.

The team observed an intriguing emergent trend: more capable models benefit more from Verbalized Sampling. Comparing GPT-4.1 against GPT-4.1-mini, the larger model showed diversity improvements 1.5 to 2 times greater. This suggests VS helps unlock latent capabilities that more powerful models possess but alignment training has suppressed.

Testing extended beyond creativity into dialogue simulation using the PersuasionForGood dataset. The task involved simulating donation persuasion conversations, comparing simulated donation amounts against actual human behavior. VS-powered simulations matched or exceeded a fine-tuned specialist model’s performance, with DeepSeek R1 using VS actually surpassing the specialist on median donation amount alignment.

Real-World Applications

Open-ended question answering provided another proving ground. The team tested on questions with many valid answers, like “Name a US state.” Direct prompting collapsed onto high-frequency responses—95% California, 4.8% Texas. Verbalized Sampling recovered a distribution closely matching state name frequencies in pre-training data, with Kullback-Leibler divergence dropping from 14.43 to just 3.50.

Coverage metrics showed similar improvements. While direct prompting generated only 10% of valid answers across test questions, Verbalized Sampling reached 67% coverage. Precision remained high at 96%, proving diversity didn’t come at accuracy’s expense.

Synthetic data generation revealed perhaps the most practically important application. The team generated 1,000 math competition problems using different methods, then fine-tuned smaller models on each dataset. Models trained on VS-generated data consistently outperformed those using direct prompting data, with average accuracy improvements of 3.7 percentage points.

This matters enormously for AI development. Current language models require massive amounts of training data, and synthetic data generation offers a path to creating specialized datasets. But if synthetic data suffers from mode collapse, it amplifies rather than reduces training biases. Verbalized Sampling provides a way to generate diverse, high-quality synthetic data at scale.

Testing on factual accuracy tasks using SimpleQA confirmed VS maintains correctness while improving diversity. Top-1 accuracy remained stable around 33-35%, while pass-at-N accuracy reached 48.5%—matching or exceeding the strongest baseline methods. Safety evaluations on StrongReject showed refusal rates above 97% across all methods, with VS exhibiting more varied refusal phrasings while maintaining safety.

The Technical Deep Dive

The mathematics underlying Verbalized Sampling reveals why it works so effectively. The team proved that when a model suffers mode collapse, different prompts collapse to different modes of the reference distribution. Instance-level prompts collapse to the modal instance. List-level prompts collapse to uniform distributions over related items. Distribution-level prompts can approximate the full reference distribution.

This happens because of how probability distributions compose during generation. When you ask for a list, the model generates a sequence where each element depends on previous elements. The most likely list isn’t necessarily the list of most likely individual items—it’s the list that best represents typical list structure given the prompt.

Distribution-level prompts add another layer. The most likely distribution isn’t a distribution of typical items—it’s the distribution that most typically represents how items are distributed. This recursive property means verbalized probabilities can encode information about the base model’s learned distribution rather than just the aligned model’s sharpened distribution.

Ablation studies confirmed key design choices. Varying the number of candidates k from 1 to 20 showed diversity increasing with k while quality declined slightly, but VS maintained better quality-diversity tradeoffs than baselines across all k values. Temperature ablations revealed VS combines effectively with temperature tuning, pushing the Pareto frontier of possible quality-diversity combinations.

Testing different decoding strategies confirmed VS’s orthogonality to other sampling methods. Combining VS with top-p sampling or min-p sampling provided additive benefits, suggesting the method addresses a different dimension of the generation problem than existing techniques.

Limitations and Future Directions

Despite impressive results, Verbalized Sampling has constraints worth acknowledging. Computational costs increase proportionally with the number of candidates generated. Creating five responses with probabilities requires roughly five times the inference cost of a single response. For latency-sensitive applications, this overhead might prove prohibitive.

The method’s effectiveness scales with model capability. Smaller or less capable models sometimes struggle with probability estimation accuracy or the cognitive burden of structured output generation. Testing showed models below a certain capability threshold occasionally degraded in quality when using VS, though this threshold appears to be dropping as models improve.

Verbalized probabilities don’t always align perfectly with actual token-level probabilities. The team found KL divergence between verbalized and pre-training distributions around 0.12-0.13 for state-of-the-art models—good but not perfect. This gap likely reflects both calibration challenges and the difficulty of estimating probabilities over combinatorially large output spaces.

Future research directions abound. Inference-time scaling represents one promising avenue. Rather than repeatedly sampling from a single prompt that suffers mode collapse, Verbalized Sampling could enable more efficient exploration of hypothesis spaces for reasoning tasks. The diverse outputs might prove particularly valuable for reinforcement learning, where exploration-exploitation tradeoffs critically impact training efficiency.

Mitigating bias in reward models offers another direction. If typicality bias causes mode collapse, developing calibration techniques to debias preference data could attack the problem at its source. Pluralistic alignment approaches that capture broader preference distributions might naturally reduce mode collapse by avoiding majority-preference optimization.

The relationship between model scale and VS benefits suggests interesting questions about emergence. Why do larger models benefit more from Verbalized Sampling? What capabilities must a model possess to effectively use distribution-level prompts? Understanding these mechanisms could inform both training and prompting strategies.

Implications for AI Development

This research challenges fundamental assumptions about the alignment-capability tradeoff. For years, researchers assumed making models safer and more helpful required sacrificing some creative potential. Verbalized Sampling suggests this tradeoff might be largely artifactual—a consequence of how we prompt aligned models rather than an inherent limitation.

The finding that aligned models retain diverse capabilities beneath their collapsed output distributions has profound implications. It suggests alignment training doesn’t destroy pre-trained knowledge but rather reshapes accessibility. Models possess far more creative range than their typical outputs suggest. The challenge becomes surfacing this hidden potential.

For practitioners, Verbalized Sampling offers immediate practical value. The method requires no model retraining, no fine-tuning, and no special API access. Anyone can implement it today using existing models through simple prompt modifications. The ready-to-use prompts provided in the research enable direct application across creative writing, data generation, simulation, and ideation tasks.

The business implications extend across industries. Content creation platforms could leverage VS to generate more diverse suggestions. Educational applications could provide students with varied examples rather than repetitive templates. Scientific research tools could explore broader hypothesis spaces. Synthetic data providers could create higher-quality training datasets.

The Cognitive Science Connection

Perhaps most intriguingly, this research bridges artificial and human intelligence in unexpected ways. Typicality bias isn’t a flaw in human reasoning—it’s an adaptive heuristic that usually serves us well. We prefer familiar patterns because they’re typically safer, more reliable, and easier to process.

But these same biases that help individuals navigate daily life create systematic problems when aggregated into training data for AI systems. The preference data that makes models helpful to average users simultaneously makes them less creative, less diverse, and less capable of surprising insights.

This tension mirrors broader challenges in human collective decision-making. Democratic processes can elevate safe, conventional choices over innovative but risky alternatives. Market mechanisms reward proven patterns rather than untested ideas. Cultural evolution often favors tradition over experimentation.

Verbalized Sampling offers a potential solution not just for AI but potentially for human collective intelligence. By explicitly representing distributions rather than selecting single options, we might preserve diversity while maintaining quality. This principle could inform everything from recommendation systems to democratic deliberation to scientific peer review.

Looking Ahead

The research team has open-sourced their code and provided detailed documentation to enable widespread adoption. Early adopters report successful application across domains from fiction writing to business strategy to scientific brainstorming. The method’s simplicity has accelerated uptake, with implementations appearing in popular AI frameworks and applications.

Future model releases will face new expectations around creative diversity. Users now understand that mode collapse isn’t inevitable—it’s addressable through better prompting strategies. Model developers may begin including diversity metrics alongside standard benchmarks, and training procedures might evolve to reduce typicality bias in preference data.

The success of Verbalized Sampling also validates an important principle: sometimes the best solutions to AI limitations come from understanding human cognition rather than just improving algorithms. By recognizing typicality bias as a cognitive phenomenon that affects training data, the team found a leverage point that purely technical approaches had missed.

This research represents more than just a better prompting method—it’s a new lens for understanding how alignment affects model behavior and a proof that inference-time interventions can unlock capabilities that training-time pressures suppress. As AI systems become more capable and more aligned, maintaining their creative potential will only grow more important.

The implications extend far beyond today’s language models. As AI systems tackle increasingly complex and open-ended problems, the ability to generate diverse hypotheses, explore alternative solutions, and avoid premature convergence on typical answers will prove essential. Verbalized Sampling offers a template for addressing these challenges that’s both theoretically grounded and practically effective.

For anyone working with AI systems, the message is clear: the models you’re using are more capable than they appear. The diversity you’re looking for already exists—you just need to know how to ask for it.

FAQs

Q1: What is mode collapse in AI models?

Mode collapse occurs when AI models repeatedly generate similar or identical responses instead of diverse outputs. This happens because alignment training causes models to favor typical, safe responses over creative variety.

Q2: How does Verbalized Sampling work?

Verbalized Sampling asks AI models to generate multiple responses along with probability scores instead of single answers. This prompting technique helps models access their full creative range rather than collapsing to stereotypical outputs.

Q3: Can I use Verbalized Sampling with existing AI models?

Yes! Verbalized Sampling works through simple prompt modifications with existing models like GPT-4, Claude, and Gemini. No special access, retraining, or technical expertise required.

Q4: Does increased diversity reduce output quality?

Surprisingly, no. Research shows Verbalized Sampling can improve both diversity and quality simultaneously, especially when combined with chain-of-thought reasoning. Some variants increased diversity by 2.1x while improving quality scores.

Q5: What causes typicality bias in AI training data?

Typicality bias stems from human cognitive tendencies to prefer familiar, easy-to-process content. When humans rate AI responses for training, they unconsciously favor conventional answers even when creative alternatives are equally good.

Q6: What are the practical applications of this research?

Verbalized Sampling improves creative writing, synthetic data generation, social simulations, hypothesis generation, open-ended question answering, and any task requiring diverse outputs from AI systems.

Q7: How much does Verbalized Sampling increase creativity?

Testing showed 1.6-2.1x increases in diversity scores across creative writing tasks, with joke writing improving from 30% to 62.5% diversity and maintaining 96%+ quality scores.