Top 5 Approaches to Addressing Today’s ChatGPT Security Risks Your Team Might Be Missing

By Lane Sullivan – SVP, Chief Information Security and Strategy Officer, Concentric AI

In a dramatically short period, AI has gone from creeping down enterprise hallways to bursting through the boardroom doors with full force. From OpenAI’s ChatGPT to Microsoft Copilot to Google Gemini and everything in between, GenAI tools have become part of day-to-day workflows faster than security teams can keep up.

You’ve probably heard about the risks associated with Copilot and Gemini, but ChatGPT warrants equal, if not more, attention. That’s because it’s the most widely used GenAI tool, and many organizations regulate it less tightly. If left unchecked, ChatGPT could pose a serious threat to enterprise data security.

The Risks of ChatGPT Even Without Access to Your Files

GenAI tools like Copilot have integrated access to corporate emails, documents, and Teams chats. While ChatGPT does not, it’s important to understand just how often sensitive data is typed, copy/pasted, or uploaded directly into ChatGPT by well-meaning employees as part of getting their work done.

In short, ChatGPT’s security concern isn’t about what it can access; it’s more about what users share, how data is processed, and what safeguards (if any) are in place to prevent mistakes from turning into incidents.

ChatGPT introduces risk to your data in several significant ways, including:

1. Employees don’t hesitate to enter sensitive data into ChatGPT every day, including customer emails, product roadmaps, and contract language. This data is then processed and can be retained and used to train future models. Despite OpenAI’s opt-out options, employee usage habits haven’t changed, and enterprises rarely have visibility into how AI tools are being used at the edge.

2. Attackers can weaponize ChatGPT for the creation of malware. If you are a threat actor who needs polymorphic malware or a convincing phishing campaign, look no further than ChatGPT. Even though OpenAI has added filters to prevent abuse, hackers continue to jailbreak the system. For example, they can disguise prompts as academic questions or penetration tests to generate harmful code or social engineering scripts.

3. There are now better, more convincing phishing campaigns to trick employees, all courtesy of ChatGPT. Email scams used to be easy to detect, but these days they look like legitimate and urgent messages from your HR department. ChatGPT enables attackers to personalize, localize, and perfect their outreach scheme, especially in spear-phishing and business email compromise attacks.

4. If you aspire to become a cybercriminal, look no further than an AI prompt for a free lesson. Threat actors these days use ChatGPT to study exploits, write Python scripts for scanning vulnerabilities, and test basic obfuscation techniques. ChatGPT makes cyberattacks easier and cybercriminals stronger.

5. With more vulnerable API integrations comes more attack surface to exploit. New avenues of attack are created when companies incorporate ChatGPT into internal workflows via APIs. Threat actors have easier access to core business systems because many of today’s APIs are new, rushed to market, and lack consistent security.

6. There are currently no guidelines on how ChatGPT outputs are used. ChatGPT might produce insecure code or faulty analysis, but because it seems believable, users trust it. Every output poses a potential risk because there’s no enforcement, no sandbox, and no review process unless the organization establishes one.
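
The review step that item 6 says is usually missing can start small. The following is a minimal sketch, assuming the generated output is Python code and that a short deny-list is enough to decide when a human should look at it. The `RISKY_CALLS` set and the function names are hypothetical choices for illustration, not a complete or standard rule set.

```python
import ast

# Hypothetical deny-list for illustration; a real review process would be broader.
RISKY_CALLS = {"eval", "exec", "os.system", "pickle.loads"}


def call_name(node: ast.Call) -> str:
    """Return a dotted name such as 'os.system' for a call node, or ''."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute) and isinstance(func.value, ast.Name):
        return f"{func.value.id}.{func.attr}"
    return ""


def review_generated_code(source: str) -> list[str]:
    """List findings that should send AI-generated code to a human reviewer."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return [f"does not parse: {exc.msg}"]
    findings = []
    for node in ast.walk(tree):
        if not isinstance(node, ast.Call):
            continue
        name = call_name(node)
        if name in RISKY_CALLS:
            findings.append(f"line {node.lineno}: call to {name}")
        # shell=True on any call (typically subprocess.*) invites shell injection.
        for kw in node.keywords:
            if (
                kw.arg == "shell"
                and isinstance(kw.value, ast.Constant)
                and kw.value.value is True
            ):
                findings.append(f"line {node.lineno}: shell=True in {name or 'call'}")
    return findings


# Example: code pasted from a chat session is checked before it is committed.
generated = "import os\nos.system('rm -rf ' + path)\n"
for finding in review_generated_code(generated):
    print(finding)
```

An empty result does not mean the code is safe; it only means none of the listed constructs appeared. The point of the sketch is that AI output passes through some enforced checkpoint rather than being trusted because it looks believable.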

How Copilot Differs from ChatGPT

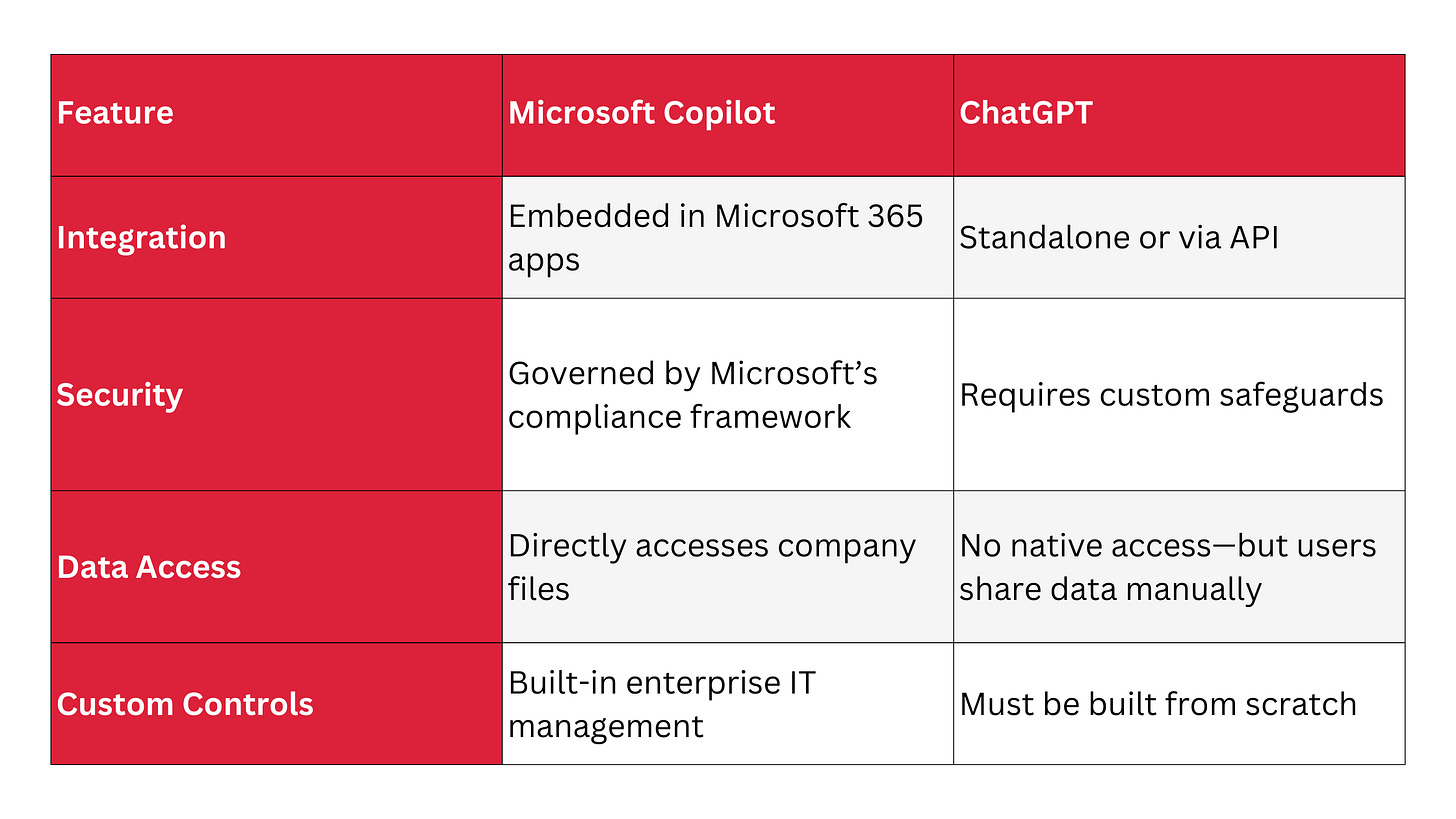

I explained earlier how Copilot and ChatGPT differ in their access to data and handling of sensitive information. However, while both tools utilize OpenAI’s models, their enterprise usage and risk levels are quite different. Essentially, Copilot is controlled, but ChatGPT is unpredictable unless you restrict it. This graphic offers a clear illustration:

Addressing ChatGPT Security Risks

It’s clear that ChatGPT wasn’t designed for enterprise use. It doesn’t adhere to security policies, respect compliance boundaries, or seek permission before handling sensitive data. However, there are more effective ways to mitigate ChatGPT security risks than simply blocking it.

Security teams should implement behind-the-scenes protections that let employees work quickly with AI tools without jeopardizing the organization’s security posture. This approach is far more effective than playing whack-a-mole with AI tools while users work around AI governance. Here are the top five best practices for addressing ChatGPT security risks:

1. Control access and integrations – Use single sign-on and enforce a zero-trust policy model across endpoints to restrict access to ChatGPT. If you’ve deployed ChatGPT via an API, put it behind an API gateway with OAuth 2.0 and encrypt data in transit.

2. Monitor AI use and flag sensitive data – Use data security tools to oversee AI-generated and user-submitted content for sensitive information. You can’t assume employees will know what’s appropriate to share. Best practice is to employ tools that can do this automatically without relying on rules, regex, or manual classifiers.

3. Deploy intelligent data loss prevention (DLP) – Use DLP that recognizes context and labels data based on its meaning, not its format. This ensures that even if someone pastes source code or a contract summary into ChatGPT, it gets flagged before leaving the perimeter. Avoid outdated DLP solutions, since they are known to fail when data doesn’t match expected patterns.

4. Educate employees in an engaging and frequent manner – Ensure they understand how ChatGPT works, what’s safe to share, and the risks of code reuse and hallucinations. Reinforce your training with real-world examples and internal phishing simulations to help users fully grasp the risks. Your AI policy is ineffective if users are unaware of it.

5. Plan for the worst – It’s crucial to have an AI incident response plan that includes your remediation steps, who gets notified, and what actions are needed to assess the impact if sensitive data is shared with ChatGPT. A best practice is to simulate the threat scenario now, before anything happens.
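
To make practices 2 and 3 concrete, here is a minimal sketch of a pre-submission gate that screens a prompt before it leaves the perimeter. It assumes the classifier is pluggable. The stand-in `pattern_classifier` is deliberately format-based, so it is an example of the outdated approach rather than a recommended one, and every name and pattern in it is hypothetical.

```python
import re
from typing import Callable, List

# A classifier takes prompt text and returns labels for any sensitive content found.
Classifier = Callable[[str], List[str]]

# Illustrative patterns only (hypothetical, not a complete rule set).
PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def pattern_classifier(text: str) -> List[str]:
    """Format-based detection, i.e. the pattern-matching style of older DLP."""
    return [label for label, rx in PATTERNS.items() if rx.search(text)]


def screen_prompt(text: str, classify: Classifier) -> dict:
    """Decide whether a prompt may be sent on to the AI tool."""
    labels = classify(text)
    return {"allowed": not labels, "labels": labels}


# A prompt that matches an expected pattern is blocked.
print(screen_prompt("Customer SSN is 123-45-6789", pattern_classifier))
# {'allowed': False, 'labels': ['us_ssn']}

# A contract summary has no recognizable format, so the same check lets it through.
print(screen_prompt(
    "Summary: Acme agrees to a 3-year exclusive license at a 40% discount",
    pattern_classifier,
))
# {'allowed': True, 'labels': []}
```

The second result is the failure mode described in practice 3: the contract terms are sensitive because of what they mean, not how they are formatted. Keeping the classifier as a parameter means a context-aware model could replace `pattern_classifier` without changing the gate itself.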

Conclusion

ChatGPT is here to stay, and its popularity will only increase. Banning it entirely won’t work because it is already deeply embedded in how users work, and they will find ways around restrictions. Organizations need visibility, control, and the right automation to prevent sensitive data from leaking as the use of these AI tools expands.