NVIDIA Unveils Cosmos: AI Platform Creates Digital Twins of Our World

NVIDIA calls it a World Foundation Model

NVIDIA has unveiled a new platform that could change how artificial intelligence interacts with the physical world. The Cosmos World Foundation Model Platform aims to help developers create realistic digital twins of our environment, paving the way for more capable robots, self-driving cars, and other AI systems that can safely learn to navigate the real world.

At the heart of this innovation is what NVIDIA calls a "World Foundation Model" (WFM) - essentially an AI that can simulate how the physical world behaves and changes over time. By training on massive datasets of video footage, these models learn to predict how scenes will unfold and respond to different actions or events.
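Cosmos's world models are large neural networks trained on video, but the underlying idea can be shown with a far smaller stand-in: learn from recorded data how a scene's state changes in response to actions, then use what was learned to predict ahead. The sketch below is a toy analogue under stated assumptions, not NVIDIA's code. A state vector stands in for a compressed video frame, the "world" is a linear system, and the helpers `collect_transitions`, `fit_world_model`, and `rollout` are hypothetical.

```python
import numpy as np

# Toy analogue of a world model: learn x_{t+1} = A x_t + B u_t from recorded
# transitions, then use the fitted model to predict how a scene evolves.
# The state x stands in for a (compressed) video frame; u is an action.


def collect_transitions(A, B, n, rng):
    """Simulate n (state, action, next_state) samples from the 'real world'."""
    X = rng.normal(size=(n, A.shape[0]))
    U = rng.normal(size=(n, B.shape[1]))
    Y = X @ A.T + U @ B.T
    return X, U, Y


def fit_world_model(X, U, Y):
    """Least-squares fit of A and B so that Y is approximately X A^T + U B^T."""
    Z = np.hstack([X, U])                      # state and action side by side
    W, *_ = np.linalg.lstsq(Z, Y, rcond=None)  # W has shape (d + m, d)
    d = X.shape[1]
    return W[:d].T, W[d:].T                    # A_hat (d, d), B_hat (d, m)


def rollout(A_hat, B_hat, x0, actions):
    """Predict future states by feeding each prediction back in as input."""
    states = [x0]
    for u in actions:
        states.append(A_hat @ states[-1] + B_hat @ u)
    return np.array(states)


rng = np.random.default_rng(0)
A_true = np.array([[0.9, 0.1], [0.0, 0.95]])
B_true = np.array([[0.0], [0.5]])
X, U, Y = collect_transitions(A_true, B_true, n=200, rng=rng)
A_hat, B_hat = fit_world_model(X, U, Y)
traj = rollout(A_hat, B_hat, np.array([1.0, 0.0]), [np.array([1.0])] * 5)
print(traj.shape)  # (6, 2)
```

Feeding each prediction back in as the next input is what lets a world model imagine several steps ahead; small errors compound the same way, which is one reason long horizons are hard.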

"Physical AI needs to be trained digitally first," explained Dr. Ying Li, lead researcher on the project. "It needs a digital twin of itself - the policy model - and a digital twin of the world - the world model. Our platform provides the building blocks for developers to create customized world models for their specific AI applications."

The potential applications are vast. Imagine a robotic arm that can practice complex manipulation tasks thousands of times in a virtual environment before ever touching a real object. Or a self-driving car AI that can encounter and learn from rare traffic scenarios without putting anyone at risk. The Cosmos platform could accelerate development across robotics, autonomous vehicles, smart cities, and more.

Key Components of the Cosmos Platform

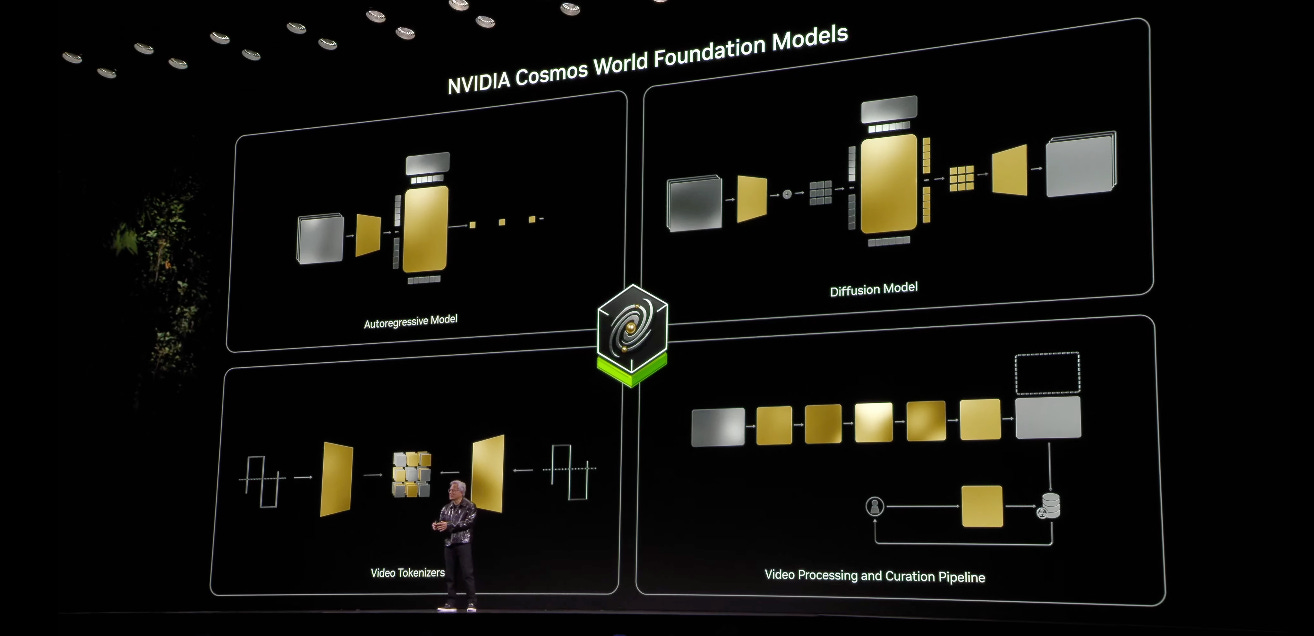

NVIDIA's new offering includes several core elements:

A video curation pipeline that processes massive datasets to extract the most useful training clips

Pre-trained world foundation models that provide a starting point for customization

Video tokenizers that efficiently compress video information

Examples of how to fine-tune models for specific applications

A "guardrail" system to prevent harmful inputs or outputs

"We're positioning world foundation models as general-purpose world models that can be fine-tuned into customized world models for downstream applications," said Li. "To help AI builders solve critical problems facing society, we're making our platform open-source and our models open-weight with permissive licenses."

This move to open up access could spur rapid innovation, allowing researchers and companies worldwide to build on NVIDIA's work. The company hopes this collaborative approach will accelerate progress in physical AI - a field that has lagged behind other areas of machine learning.

Training in the Matrix: Why Digital Worlds Matter

A key challenge in developing AI for physical tasks is the difficulty and risk of real-world training. Unlike language models that can harmlessly generate millions of practice sentences, a robot or vehicle making mistakes during training could cause damage or injury.

This is where world foundation models come in. They provide highly accurate simulations in which AI agents can explore, learn, and even fail safely. Once an agent has mastered a task virtually, it can be deployed in the real world with a much higher chance of success.
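To see why a simulated world makes failure cheap, consider evaluating candidate policies entirely inside a model. In this sketch `world_model_step` is a hypothetical, hand-written stand-in for a trained WFM (a one-dimensional point mass), and the policy is a simple feedback controller whose gains are chosen by simulated rollouts. No real system is touched at any point.

```python
import itertools

# Hypothetical stand-in for a trained world model: a 1-D point mass.
# A real WFM would be a learned network; this one is hand-written so the
# example runs on its own.
DT = 0.1


def world_model_step(pos, vel, force):
    """Predict the next (position, velocity) given a force command."""
    return pos + DT * vel, vel + DT * force


def policy(gains, pos, vel):
    """Simple feedback policy that tries to drive the mass to position 0."""
    kp, kd = gains
    return -kp * pos - kd * vel


def evaluate_in_model(gains, horizon=100):
    """Roll the policy out inside the world model and return its total cost.

    Bad policies cost nothing here: no real robot moves during evaluation.
    """
    pos, vel, cost = 1.0, 0.0, 0.0
    for _ in range(horizon):
        force = policy(gains, pos, vel)
        cost += pos**2 + 0.01 * force**2
        pos, vel = world_model_step(pos, vel, force)
    return cost


candidates = list(itertools.product([0.0, 1.0, 4.0], [0.0, 1.0, 3.0]))
costs = {g: evaluate_in_model(g) for g in candidates}
best = min(costs, key=costs.get)
print("best gains:", best)
```

Some candidates here (for example a strong position gain with no damping) oscillate and grow, which in the real world could mean a crashed robot; inside the model they simply score badly.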

"A well-trained WFM, which models the dynamic patterns of the world based on input perturbations, can serve as a good initialization of the policy model," Li explained. "This helps address the data scarcity problem in Physical AI."

The Cosmos platform supports two main approaches to building these world models:

Diffusion models: These generate videos by gradually removing noise from random data.

Autoregressive models: These generate videos piece by piece, conditioned on what came before.

Both are built on state-of-the-art transformer architectures, similar to those behind large language models.
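The difference between the two generation styles lies mostly in the control flow, which a toy example can make concrete. Neither "model" below is trained: the diffusion denoiser is the exact posterior mean for a Gaussian stand-in dataset, the update is a deterministic DDIM-style step, and the autoregressive model is a fixed next-token table. The names, noise schedule, and sizes are illustrative assumptions, not Cosmos settings.

```python
import numpy as np

# --- Diffusion: start from pure noise and refine the whole clip step by step ---
MU = np.linspace(0.0, 1.0, 8)  # hypothetical "clean clip": one value per frame
SIGMA = 0.05                   # spread of the toy data distribution around MU


def denoise(x_t, alpha_bar):
    """Exact E[x0 | x_t] for x0 ~ N(MU, SIGMA^2) and x_t = a * x0 + b * noise."""
    a, b2 = np.sqrt(alpha_bar), 1.0 - alpha_bar
    gain = a * SIGMA**2 / (a**2 * SIGMA**2 + b2)
    return MU + gain * (x_t - a * MU)


def sample_diffusion(steps=50, seed=0):
    rng = np.random.default_rng(seed)
    alpha_bars = np.linspace(1e-4, 1.0, steps + 1)  # from almost pure noise to clean
    x = rng.normal(size=MU.shape)                   # every frame starts as noise
    for ab, ab_next in zip(alpha_bars[:-1], alpha_bars[1:]):
        x0_hat = denoise(x, ab)                                   # predicted clean clip
        eps_hat = (x - np.sqrt(ab) * x0_hat) / np.sqrt(1.0 - ab)  # implied noise
        # Deterministic DDIM-style step to the next, less noisy level.
        x = np.sqrt(ab_next) * x0_hat + np.sqrt(1.0 - ab_next) * eps_hat
    return x


# --- Autoregressive: emit one token at a time, conditioned on what came before ---
VOCAB = 5
# next_token_probs[i, j] = P(next = j | previous = i); this fixed table favours
# "advance by one" as a stand-in for learned dynamics.
next_token_probs = np.full((VOCAB, VOCAB), 0.05)
for i in range(VOCAB):
    next_token_probs[i, (i + 1) % VOCAB] = 0.8


def sample_autoregressive(first_token, length):
    tokens = [first_token]
    for _ in range(length - 1):
        probs = next_token_probs[tokens[-1]]  # condition on the latest token
        tokens.append(int(np.argmax(probs)))  # greedy choice keeps the demo deterministic
    return tokens


clip = sample_diffusion()
tokens = sample_autoregressive(first_token=0, length=7)
print(np.round(clip, 2))  # close to MU
print(tokens)             # [0, 1, 2, 3, 4, 0, 1]
```

The diffusion loop refines every frame at once over many steps, while the autoregressive loop commits to one token at a time. This toy table conditions only on the latest token; real autoregressive transformers attend to the whole prefix.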

From Raw Data to Digital Worlds

Creating convincing simulations of reality requires massive amounts of training data. The NVIDIA team started with a staggering 20 million hours of video footage, ranging from dashcam recordings to nature documentaries.

However, not all of this data is equally useful. Many videos contain repetitive content, low-quality segments, or artificial edits that could confuse an AI trying to learn about the physical world. To address this, the researchers developed a sophisticated video curation pipeline.

This automated system first splits long videos into individual shots without scene changes. It then applies a series of filters to identify high-quality, information-rich clips. These are annotated with descriptions generated by a visual language model. Finally, the system performs semantic deduplication to ensure a diverse but compact dataset.
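The four curation stages can be sketched end to end on toy data. Each "frame" here is a two-number feature vector, and the thresholds, the mean-feature embedding used for deduplication, and the `annotate` placeholder (standing in for a visual language model) are hypothetical choices rather than NVIDIA's pipeline.

```python
import numpy as np

# Toy version of the four curation stages; every threshold and helper is hypothetical.


def split_into_shots(frames, cut_threshold=1.0):
    """Stage 1: start a new shot wherever consecutive frames differ sharply."""
    shots, start = [], 0
    for i in range(1, len(frames)):
        if np.abs(frames[i] - frames[i - 1]).sum() > cut_threshold:
            shots.append(frames[start:i])
            start = i
    shots.append(frames[start:])
    return shots


def passes_filters(shot, min_len=3, min_motion=0.01):
    """Stage 2: drop very short shots and (near-)static ones."""
    if len(shot) < min_len:
        return False
    return np.abs(np.diff(shot, axis=0)).mean() >= min_motion


def annotate(shot):
    """Stage 3: placeholder for a vision-language captioner (hypothetical)."""
    return f"clip with {len(shot)} frames"


def deduplicate(clips, max_cosine=0.99):
    """Stage 4: keep a clip only if its embedding is not too close to a kept one."""
    kept, kept_embs = [], []
    for clip, caption in clips:
        emb = clip.mean(axis=0)           # crude stand-in for a semantic embedding
        emb = emb / np.linalg.norm(emb)
        if all(float(emb @ e) < max_cosine for e in kept_embs):
            kept.append((clip, caption))
            kept_embs.append(emb)
    return kept


def curate(frames):
    shots = split_into_shots(frames)
    good = [s for s in shots if passes_filters(s)]
    annotated = [(s, annotate(s)) for s in good]
    return deduplicate(annotated)


shot_a = np.array([[1.0 + 0.05 * i, 0.0] for i in range(5)])
shot_b = np.array([[0.0, 5.0]] * 4)   # static: removed by the filter
shot_c = 2 * shot_a                   # same content, brighter: a near-duplicate
shot_d = np.array([[0.0, 1.0 + 0.1 * i] for i in range(4)])
frames = np.vstack([shot_a, shot_b, shot_c, shot_d])
result = curate(frames)
print([caption for _, caption in result])  # ['clip with 5 frames', 'clip with 4 frames']
```

Ordering matters for cost: cheap checks like shot splitting and filtering run first, so the expensive captioning step only sees clips that are worth keeping.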

"We accumulate about 20M hours of raw videos with resolutions from 720p to 4k," said Dr. Jason Chen, who led the data curation efforts. "After processing, we generate about 100 million video clips for pre-training and about 10 million for fine-tuning specific applications."

The curated dataset covers a wide range of categories relevant to physical AI, including:

Driving scenarios (11%)

Hand motions and object manipulation (16%)

Human activities (10%)

Spatial awareness and navigation (16%)

First-person perspectives (8%)

Natural phenomena (20%)

Dynamic camera movements (8%)

Synthetically rendered scenes (4%)

Other miscellaneous content (7%)

This diversity helps ensure the resulting world models can generalize to a variety of tasks and environments.

Compressing Reality: The Art of Video Tokenization

A critical challenge in working with video data is its sheer size. To make training and inference more efficient, the NVIDIA team developed novel "video tokenizers" - AI models that can compress video information while preserving its essential content.

"Video contains rich information about the visual world," Li noted. "However, to facilitate learning of the WFMs, we need to compress videos into sequences of compact tokens while maximally preserving the original contents."

The researchers explored both continuous tokens (vectors of numbers) and discrete tokens (integer values) to represent video content. Their tokenizers are designed to be causal, meaning they don't rely on future frames to encode the current moment. This aligns well with how physical AI systems experience the world in real-time.

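Importantly, this causal design also lets the models work with single images: because the first frame is encoded without reference to any later frame, a still image can be treated as a one-frame video.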

"This is important for the video model to leverage image datasets for training, which contain rich appearance information of the worlds and tend to be more diverse," Li explained.

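Both token types and the causal layout can be illustrated in a few lines. This sketch assumes a layout in which the first frame is encoded alone and each later group of `K` frames becomes one latent, with average pooling standing in for a learned encoder. For discrete tokens it uses quantization in the style of finite scalar quantization (FSQ), one common way to turn continuous vectors into integers; the level counts are illustrative.

```python
import numpy as np

# Toy causal video tokenizer (illustrative assumptions throughout): the first
# frame is encoded on its own, then each later group of K frames becomes one
# latent. "Encoding" is plain average pooling instead of a learned network.
K = 4        # temporal compression factor
SPATIAL = 2  # spatial pooling factor


def pool_spatial(frame):
    """Average each SPATIAL x SPATIAL patch of a 2-D frame."""
    h, w = frame.shape
    return frame.reshape(h // SPATIAL, SPATIAL, w // SPATIAL, SPATIAL).mean(axis=(1, 3))


def encode_causal(video):
    """Continuous tokens: latent i uses no frame that comes after its group."""
    latents = [pool_spatial(video[0])]  # first frame is encoded alone
    for start in range(1, len(video), K):
        group = video[start:start + K]  # current group only, never later frames
        latents.append(pool_spatial(group.mean(axis=0)))
    return np.stack(latents)


LEVELS = np.array([5, 5, 5])  # per-channel levels -> 5 * 5 * 5 = 125 possible codes


def quantize_fsq(z):
    """Discrete tokens in the style of finite scalar quantization (FSQ).

    Each channel of z (last axis, len(LEVELS) channels) is squashed with tanh,
    rounded to one of LEVELS[c] values, and the digits are combined into one integer.
    """
    half = (LEVELS - 1) / 2
    digits = (np.round(np.tanh(z) * half) + half).astype(int)  # each in [0, LEVELS[c] - 1]
    place = np.cumprod(np.concatenate(([1], LEVELS[:-1])))     # mixed-radix place values
    return (digits * place).sum(axis=-1)


rng = np.random.default_rng(0)
video = rng.random((9, 8, 8))             # 9 frames of 8x8 "pixels"
latents = encode_causal(video)            # 1 + 8 / K = 3 latents of shape 4x4
image_latent = encode_causal(video[:1])   # a single image is a one-frame video
print(latents.shape, image_latent.shape)  # (3, 4, 4) (1, 4, 4)
print(np.allclose(encode_causal(video[:5]), latents[:2]))  # True: prefix in, prefix out

codes = quantize_fsq(rng.normal(size=(6, 3)))  # six 3-channel vectors -> six integers
print(codes)
```

The prefix check is what causality buys: encoding the first five frames yields exactly the first two latents of the full clip, so a system running in real time never has to wait for future frames.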
From General to Specific: Customizing World Models

While the pre-trained world foundation models provide a powerful starting point, most real-world applications will require further specialization. The Cosmos platform includes examples of how to fine-tune these models for specific tasks:

Camera Control: By conditioning the model on camera pose information, developers can create navigable virtual worlds where users can freely explore generated environments.

Robotic Manipulation: Fine-tuning on datasets of robotic actions allows the model to better predict how objects will respond to manipulation.

Autonomous Driving: The team demonstrated how pre-trained models could be adapted for various driving-related tasks, potentially accelerating the development of self-driving technologies.
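Fine-tuning usually means continuing training from pretrained weights on a smaller, task-specific dataset, often while adding a new conditioning input such as camera pose or robot actions. The toy below shows that pattern with a linear next-state predictor: it is "pretrained" on action-free transitions, then gains action weights that start at zero, so the model initially behaves exactly like the pretrained one. The data, sizes, and zero-initialization choice are assumptions for illustration, not the Cosmos recipe.

```python
import numpy as np

# Toy fine-tuning: a "pretrained" next-state predictor learned from generic,
# action-free data gains a new action input for a robotics-style task.
rng = np.random.default_rng(0)
d, m = 4, 2
A = 0.9 * np.eye(d) + 0.05 * rng.normal(size=(d, d))  # shared scene dynamics
B = rng.normal(size=(d, m))                             # effect of robot actions

# "Pre-training": generic transitions with no actions.
X_gen = rng.normal(size=(1000, d))
Y_gen = X_gen @ A.T
W_T, *_ = np.linalg.lstsq(X_gen, Y_gen, rcond=None)
W_pre = W_T.T  # pretrained weights

# Small task-specific dataset where actions matter.
X = rng.normal(size=(200, d))
U = rng.normal(size=(200, m))
Y = X @ A.T + U @ B.T


def loss(W, V):
    """Mean squared prediction error over the task dataset."""
    err = X @ W.T + U @ V.T - Y
    return (err**2).sum() / len(X)


W, V = W_pre.copy(), np.zeros((d, m))  # new action weights start at zero
loss_before = loss(W, V)
lr = 0.05
for _ in range(300):
    err = X @ W.T + U @ V.T - Y
    W -= lr * 2 * err.T @ X / len(X)  # gradient step on the pretrained weights
    V -= lr * 2 * err.T @ U / len(X)  # gradient step on the new action weights
loss_after = loss(W, V)
print(f"loss before fine-tuning: {loss_before:.3f}, after: {loss_after:.6f}")
```

Starting the new weights at zero means fine-tuning begins from the pretrained model's behavior instead of disrupting it, and gradient descent then learns only what the new task adds.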

"To better protect the developers when using the world foundation models, we develop a powerful guardrail system," Li added. This system includes both a "pre-Guard" to block harmful inputs and a "post-Guard" to prevent problematic outputs.

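The two-stage structure of such a guardrail can be expressed as a wrapper around generation. In this sketch the keyword `BLOCKLIST`, the `flagged_unsafe` field, and `generate_video` are hypothetical placeholders; a production system would rely on learned safety classifiers rather than keyword matching.

```python
# Minimal sketch of a two-stage guardrail around a generator. Every name here
# is a hypothetical placeholder, not part of the Cosmos API.
BLOCKLIST = {"weapon", "gore"}


def pre_guard(prompt: str) -> bool:
    """Pre-Guard: reject prompts containing blocked terms before any generation."""
    words = set(prompt.lower().split())
    return words.isdisjoint(BLOCKLIST)


def post_guard(output: dict) -> bool:
    """Post-Guard: inspect the generated result before it is returned."""
    return not output.get("flagged_unsafe", False)


def generate_video(prompt: str) -> dict:
    """Stand-in for a world foundation model call."""
    return {"prompt": prompt, "frames": [], "flagged_unsafe": False}


def guarded_generate(prompt: str):
    if not pre_guard(prompt):
        return None  # blocked input: the model never runs
    output = generate_video(prompt)
    if not post_guard(output):
        return None  # blocked output: nothing is returned
    return output


print(guarded_generate("a robot arm stacks red blocks") is not None)  # True
print(guarded_generate("a weapon on a table"))                          # None
```

Checking inputs first avoids spending compute on requests that would be refused anyway, while the output check catches problems that an innocuous-looking prompt can still produce.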
The Road Ahead: Challenges and Potential

While the Cosmos platform represents a significant step forward, the researchers acknowledge that creating truly comprehensive world models remains an enormous challenge.

"Additional research is required to advance the state-of-the-art further," Li cautioned. Some key areas for improvement include:

Handling longer time horizons and more complex causal relationships

Improving the physical accuracy of simulations, especially for edge cases

Reducing computational requirements for training and inference

Ensuring models can generalize to novel scenarios not seen in training data

Despite these challenges, the potential impact of this technology is immense. World foundation models could accelerate progress across a wide range of fields:

Robotics: Faster development of more capable and flexible robots for manufacturing, healthcare, and home assistance.

Autonomous vehicles: Improved safety and decision-making in complex traffic scenarios.

Virtual and augmented reality: More realistic and interactive digital environments.

Scientific simulations: Better models for studying climate change, molecular interactions, and other complex systems.

Smart cities: Enhanced urban planning and management through accurate digital twins.

"We believe a WFM is useful to Physical AI builders in many ways," Li enthused. Beyond direct applications, these models could serve as valuable tools for policy evaluation, initializing AI agents, reinforcement learning, planning, and generating synthetic training data.

The open nature of the Cosmos platform means its full potential may only become apparent as researchers and developers worldwide begin to experiment with and build upon it.

A New Era for AI and the Physical World

NVIDIA's Cosmos World Foundation Model Platform represents a significant milestone in bridging the gap between AI and the physical world. By providing powerful tools to create realistic digital simulations, it could dramatically accelerate the development of AI systems capable of understanding and interacting with our environment.

As these technologies mature, we may see a new generation of robots, vehicles, and other AI-powered systems that can navigate the complexities of the real world with unprecedented skill and adaptability. From more efficient factories to safer roads and smarter cities, the impact could be felt across nearly every aspect of our lives.

However, as with any powerful new technology, careful consideration of ethical implications and potential risks will be crucial. Ensuring these world models are developed and deployed responsibly will be an ongoing challenge for the AI community.

For now, NVIDIA has taken an important first step by making its platform open and accessible. As researchers and developers around the globe begin to explore its possibilities, we may be witnessing the dawn of a new era in the relationship between artificial intelligence and the physical world around us.