Microsoft Launches Azure AI Content Safety Service to Boost Online Well-Being

With the growing tide of user-generated and AI-produced content online, keeping harmful material off platforms and out of applications has become a pressing challenge. Now Microsoft is offering organizations a new tool to help moderate content and curb the spread of unsafe material online.

The tech giant has officially launched Azure AI Content Safety, a service that uses artificial intelligence to detect and filter offensive, risky or undesirable content in text and images. Powered by neural networks trained on massive datasets, the service scans inputs and assigns severity scores to flag anything from crude language to adult themes, violence or hateful speech.

According to Microsoft product manager Louise Han, focusing on online safety through content moderation is key to "creating a safer digital environment that promotes responsible use of AI." Han notes the growing importance of filtering not just human posts but also AI outputs, to uphold ethical standards and build trust in emerging technologies.

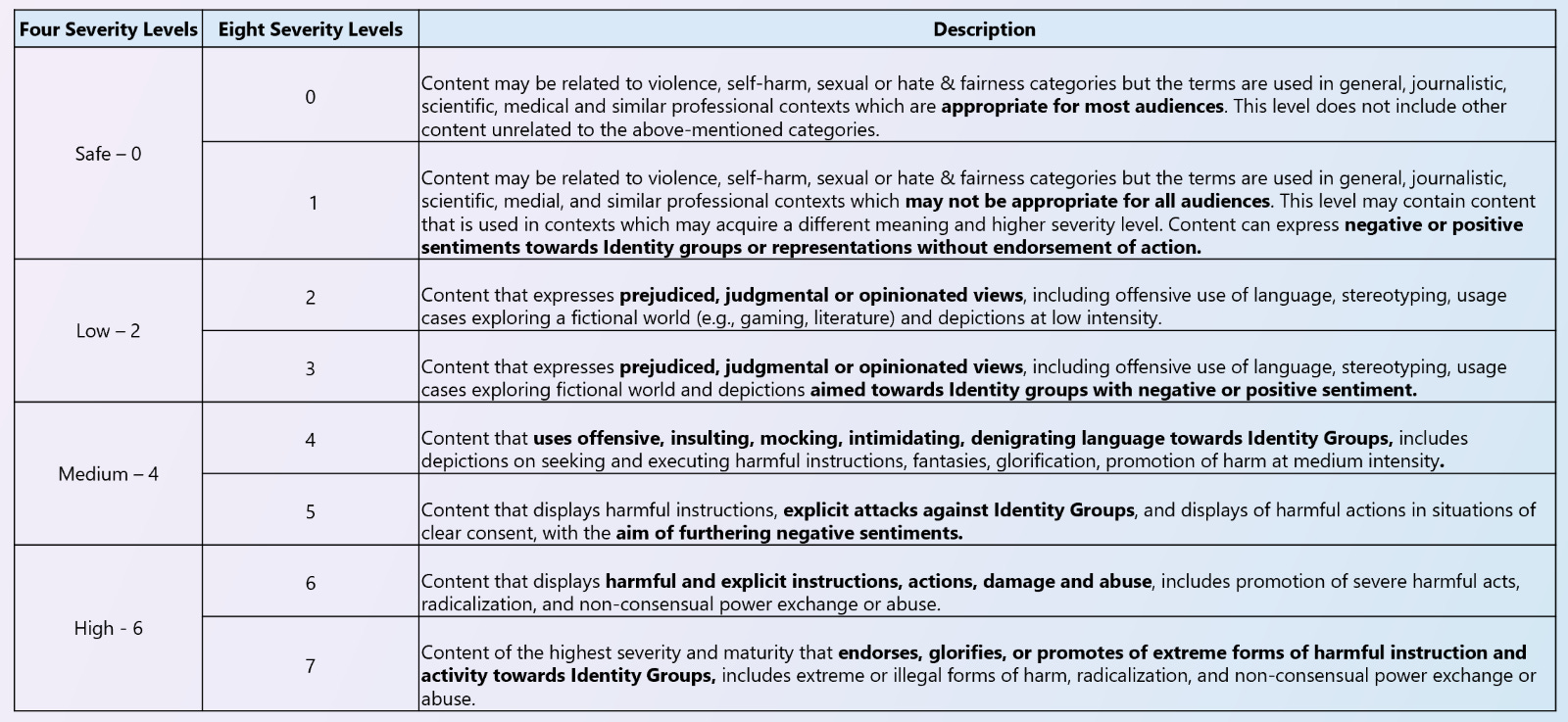

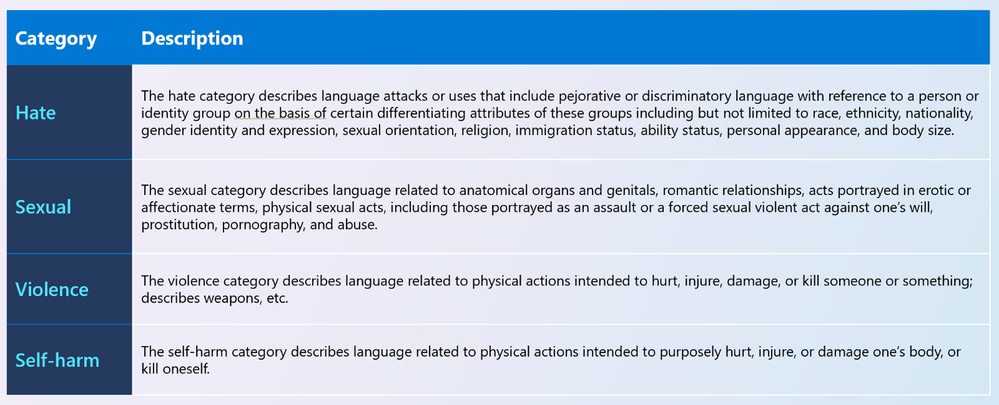

Azure AI Content Safety handles a wide range of languages and covers categories such as hate, violence and self-harm, with the aim of providing broad protection. Its image recognition capabilities analyze visuals, while its text filters root out written threats. The service also grades each identified issue on a severity scale of zero to seven.
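To illustrate how an application might act on such severity grades, here is a minimal Python sketch. It does not call the Azure service or its SDK. The function name `moderate`, the `Decision` type, the category keys and the two thresholds (`review_at`, `block_at`) are all hypothetical choices made for this example, and the only detail taken from the article is the zero-to-seven scale.

```python
from dataclasses import dataclass

# The article describes a severity scale of zero to seven.
MAX_SEVERITY = 7


@dataclass(frozen=True)
class Decision:
    """Hypothetical result type: the action taken and the categories that caused it."""
    action: str       # "allow", "review" or "block"
    triggered: dict   # categories whose severity reached the review threshold


def moderate(severities, review_at=2, block_at=4):
    """Map per-category severity scores (0-7) to a moderation action.

    The thresholds are illustrative assumptions, not values recommended
    by Microsoft; a real deployment would tune them per category.
    """
    for category, score in severities.items():
        if not 0 <= score <= MAX_SEVERITY:
            raise ValueError(f"severity for {category!r} out of range: {score}")

    # The most severe category drives the overall decision.
    worst = max(severities.values(), default=0)
    triggered = {c: s for c, s in severities.items() if s >= review_at}

    if worst >= block_at:
        action = "block"
    elif worst >= review_at:
        action = "review"
    else:
        action = "allow"
    return Decision(action=action, triggered=triggered)


if __name__ == "__main__":
    # Example scores as they might come back for one piece of content.
    print(moderate({"hate": 0, "violence": 5, "self_harm": 2}))
```

In this sketch a single high score is enough to block the content, while mid-range scores are routed to human review. Whether that policy is appropriate depends on the platform, so the thresholds are left as parameters.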

Priced to fit a range of security budgets, Azure AI Content Safety gives organizations a scalable way to moderate user interactions and automated outputs at the intersection of AI, online platforms and public well-being. As Han notes, getting content safety right protects users from potential harms while fostering responsible innovation, a win-win as artificial intelligence becomes more widespread.