Anthropic Raises the Bar for Safe and Helpful AI With New Claude Models

Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus

AI safety startup Anthropic has taken another step to push the boundaries of beneficial artificial intelligence with the release of advanced new models for its popular Claude chatbot.

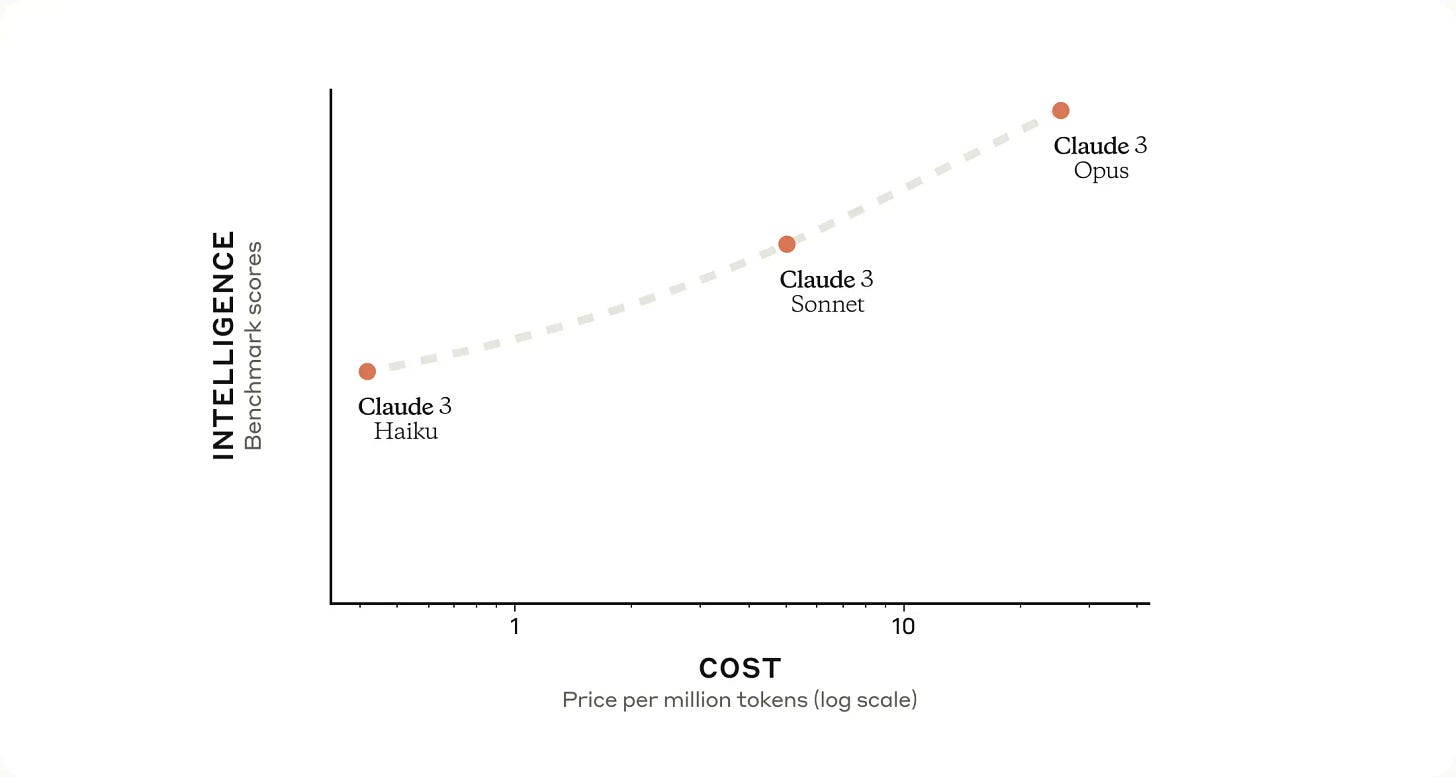

Dubbed Opus, Sonnet and Haiku, the three generative pre-trained models demonstrate state-of-the-art conversational ability and human-like responses. Anthropic claims the models outperform existing systems on benchmarks for mathematics and other domains while addressing past issues with AI control and content generation.

Founded with a mission to develop AI that benefits humanity, Anthropic has focused on implementing strong guardrails to ensure its technology stays helpful, harmless and honest. The new models refine this approach, reducing unnecessary refusals to answer while maintaining strict controls.

Opus is described as the most capable of the trio, able to carry on natural discussions through deft topic switching and follow-up queries. Sonnet and Haiku showcase refined language abilities for discussions involving poetry, quotes and succinct replies.

The releases aim to strengthen Claude's position against fast-moving rivals such as ChatGPT and Gemini. Anthropic believes its balance of capability and caution sets the standard for responsible development of powerful conversational AI.

While legal disputes over copyright persist across the industry, Anthropic's new models double down on its mission to advance AI for good, combining collaborative research with partners like AWS and prudent safeguards that limit unwanted behaviors. As the field evolves, Anthropic says it is committed to keeping humans firmly in control.
