AI-Powered Trust: A New Framework for Collaborative Systems

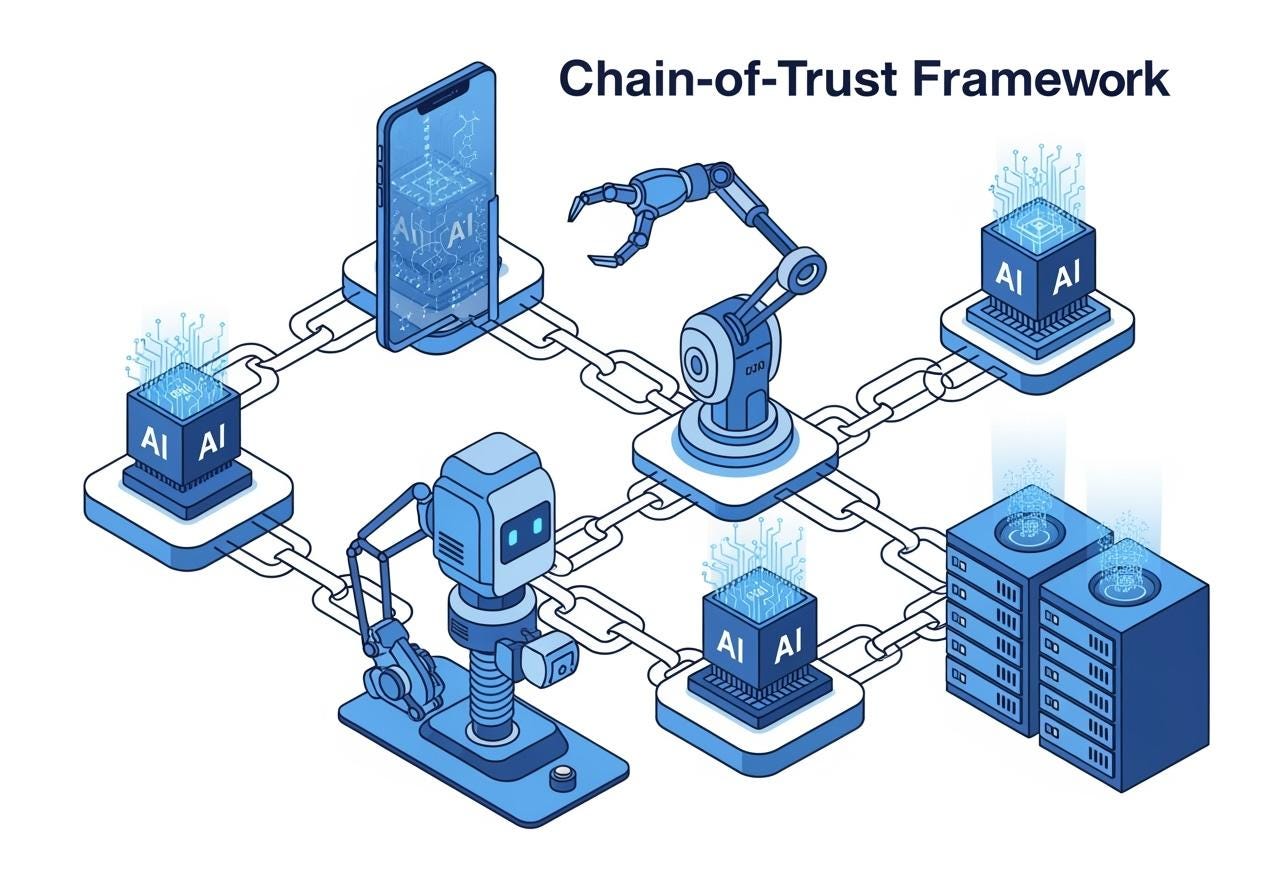

As devices increasingly need to work together on complex tasks, a new approach to trust evaluation could change how collaboration partners are selected. Researchers have developed a framework called "Chain-of-Trust" that uses generative AI to assess which devices can be trusted for a specific task.

Imagine you need to create a 3D map from photos, but your smartphone lacks the processing power. How do you know which nearby devices—like robots, servers, or other phones—can be trusted to help? This new system tackles that challenge by breaking down trust into stages, checking different aspects of potential collaborators one by one.

The research team from Western University, University of Glasgow, and University of Waterloo reports that their approach achieves up to 92% accuracy in identifying trustworthy collaborators, compared with 64% for a simpler chain-of-thought approach and 35% for standard methods. Their work addresses a critical need as our world becomes more interconnected, with devices increasingly dependent on each other to complete resource-intensive tasks.

"Traditional trust evaluation methods try to collect all information at once, which is inefficient and often impossible in real-world networks," explains the research team. "Our approach collects only what's needed at each stage, making better use of limited resources while still ensuring tasks are completed reliably."

The system works like a multi-stage interview process for devices, first checking if they offer the right services, then evaluating their communication capabilities, computing resources, and finally their history of delivering results honestly. At each stage, only the most promising candidates advance to the next round of evaluation.

What makes this approach particularly powerful is its use of generative AI to understand both the requirements of tasks and the capabilities of potential collaborators. This allows for more nuanced and accurate trust assessments than conventional methods that rely on fixed metrics or simplistic security checks.

As our devices become more interconnected and tasks grow more complex, this progressive approach to trust evaluation could become essential infrastructure for the collaborative systems of tomorrow.

The Trust Challenge in Collaborative Computing

In today's increasingly connected world, individual devices often lack the computational power, energy, or specialized capabilities needed to handle complex tasks on their own. This limitation has given rise to collaborative computing systems, where multiple devices work together to accomplish what would be impossible for a single device.

These collaborative systems are already transforming industries like manufacturing, healthcare, and smart cities, providing high-quality services with minimal delays. However, a critical challenge remains: how to identify which devices can be trusted to collaborate effectively.

"Trust in collaborative systems isn't just about security," notes the research team. "It encompasses whether a device has the right capabilities, sufficient resources, and a history of reliable performance for a specific task."

Traditional approaches to trust have significant limitations. Many equate trust solely with security, focusing on authentication mechanisms or blockchain technology while ignoring other crucial factors like available computing power or communication bandwidth. Others treat trust as a fixed relationship between devices, failing to recognize that trustworthiness varies depending on the specific task at hand.

Perhaps most importantly, conventional methods assume all information about potential collaborators can be collected simultaneously—an assumption that rarely holds true in real-world networks where data arrives at different times due to varying network conditions.

The Chain-of-Trust framework addresses these limitations by taking a progressive, task-specific approach to trust evaluation, collecting only the most relevant information at each stage of assessment.

How Chain-of-Trust Works

The Chain-of-Trust framework first decomposes what the task requires, then evaluates candidate devices in four sequential stages, each focused on one aspect of trustworthiness:

Task Requirement Decomposition: The system analyzes what the task requires in terms of services, resources, and security levels.

Service Availability Evaluation: The system checks which devices can provide the specific services needed for the task.

Communication Resource Evaluation: For devices that offer the right services, the system evaluates whether they have sufficient communication capabilities (bandwidth, security) to receive the task data.

Computing Resource Evaluation: Devices that pass the communication check are then evaluated on their computing resources and security to ensure they can process the task effectively.

Result Delivery Evaluation: Finally, the system assesses whether the remaining devices have a history of honestly delivering results.

Only devices that successfully pass all stages are considered fully trustworthy for the specific task.
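The staged filtering described above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: the `Stage` class, the `chain_of_trust` function, the device records, and the numeric thresholds are all hypothetical, and simple rule checks stand in for the generative-AI evaluation the framework uses.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Stage:
    name: str
    collect: Callable[[str], dict]   # fetches only what this stage needs
    passes: Callable[[dict], bool]   # checks it against the task requirement


def chain_of_trust(devices: List[str], stages: List[Stage]) -> List[str]:
    """Run the stages in order; later stages only query the survivors."""
    candidates = list(devices)
    for stage in stages:
        candidates = [d for d in candidates if stage.passes(stage.collect(d))]
        if not candidates:
            break  # nobody left to evaluate
    return candidates


# Hypothetical device records, invented purely for illustration.
INVENTORY = {
    "phone": {"services": {"3d_mapping"}, "bandwidth_mbps": 20, "gpu": False, "honest_rate": 0.99},
    "server": {"services": {"3d_mapping"}, "bandwidth_mbps": 900, "gpu": True, "honest_rate": 0.97},
    "robot": {"services": {"navigation"}, "bandwidth_mbps": 300, "gpu": True, "honest_rate": 0.95},
    "workstation": {"services": {"3d_mapping"}, "bandwidth_mbps": 800, "gpu": True, "honest_rate": 0.60},
}


def field(key: str) -> Callable[[str], dict]:
    """Build a collector that reads a single attribute of a device."""
    return lambda device: {key: INVENTORY[device][key]}


# Thresholds below are assumptions, not values from the study.
STAGES = [
    Stage("service", field("services"), lambda info: "3d_mapping" in info["services"]),
    Stage("communication", field("bandwidth_mbps"), lambda info: info["bandwidth_mbps"] >= 100),
    Stage("computing", field("gpu"), lambda info: info["gpu"]),
    Stage("delivery", field("honest_rate"), lambda info: info["honest_rate"] >= 0.9),
]

trusted = chain_of_trust(list(INVENTORY), STAGES)
print(trusted)  # ['server']
```

Because each stage's `collect` function is called only for devices that survived the previous stage, information about rejected devices is never requested again.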

"What makes our approach unique is that we don't try to collect all information at once," the researchers explain. "Instead, we progressively gather just what we need at each stage, which is much more efficient and realistic in actual networks where information updates at different rates."

This staged approach offers several advantages. It reduces the complexity of trust evaluation by breaking it into manageable steps. It allows for more efficient resource use, as only relevant information is collected at each stage. And it enables more accurate assessment by focusing on the specific requirements of each task.

The AI Advantage

A key innovation in the Chain-of-Trust framework is its use of generative AI to power the trust evaluation process. The researchers implemented their system using large language models (LLMs) such as GPT-4o, taking advantage of several capabilities that make these models well-suited for trust assessment:

In-context learning: The AI can understand complex scenarios and interpret nuanced meanings, allowing it to analyze task requirements and device capabilities in depth.

Few-shot learning: The system can adapt to new types of tasks with minimal examples, eliminating the need for extensive training data.

Chain-of-thought reasoning: The AI can break down complex evaluations into logical steps, improving the accuracy of trust assessments.
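To make these three capabilities concrete, here is a rough sketch of how a single evaluation stage might be phrased as a prompt. The wording, the `VERDICT:` convention, and the function names `build_stage_prompt` and `parse_verdict` are assumptions made for illustration; the prompts actually used in the study are not reproduced here, and no model is called.

```python
from typing import List, Tuple


def build_stage_prompt(stage: str, requirement: str, device_report: str,
                       examples: List[Tuple[str, str, str]]) -> str:
    """Assemble a few-shot prompt that asks for step-by-step reasoning."""
    lines = [
        f"You are evaluating the '{stage}' stage of a trust assessment.",
        "Reason step by step, then finish with 'VERDICT: PASS' or 'VERDICT: FAIL'.",
        "",
    ]
    # Few-shot learning: a handful of worked examples placed in the context.
    for report, reasoning, verdict in examples:
        lines += [f"Device report: {report}",
                  f"Reasoning: {reasoning}",
                  f"VERDICT: {verdict}",
                  ""]
    # The new case, ending where the model should start its own reasoning.
    lines += [f"Task requirement: {requirement}",
              f"Device report: {device_report}",
              "Reasoning:"]
    return "\n".join(lines)


def parse_verdict(reply: str) -> bool:
    """Read the final verdict line; a missing verdict counts as a failure."""
    for line in reversed(reply.strip().splitlines()):
        if line.strip().upper().startswith("VERDICT:"):
            return line.split(":", 1)[1].strip().upper() == "PASS"
    return False


prompt = build_stage_prompt(
    stage="communication",
    requirement="secure and fast transmission of several photos",
    device_report="encrypted link available, high bandwidth",
    examples=[("unencrypted link, low bandwidth",
               "The link is neither secure nor fast.", "FAIL")],
)
print(prompt)
```

Treating an unparseable reply as a failure is a conservative design choice: a device is only advanced when the model clearly says it should be.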

"Traditional trust evaluation methods struggle with the complexity and variability of modern collaborative tasks," the research team notes. "Generative AI gives us the flexibility to adapt to different task requirements and understand the capabilities of diverse devices."

In their experiments, the researchers found that their AI-powered Chain-of-Trust approach achieved significantly higher accuracy than alternative methods. When implemented with GPT-4o, the system correctly identified trustworthy devices 92% of the time, compared to just 64% for a simpler chain-of-thought approach and 35% for standard methods.

Real-World Applications

To demonstrate their framework, the researchers built a collaborative system consisting of various devices: Google Pixel 8 phones, DELL servers, Rosbot Plus and Robofleet robots, and Lambda GPU workstations.

In one example scenario, a device needed to complete a 3D mapping task quickly and securely using several photos. The Chain-of-Trust system analyzed this request and broke it down into specific requirements: the need for 3D mapping services, secure and fast communication capabilities, powerful and secure computing resources, and reliable result delivery.
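One way to picture the decomposed request is as a small record with one field per evaluation stage. The `TaskRequirements` class and its field names are hypothetical; only the requirement wording comes from the scenario described above.

```python
from dataclasses import dataclass
from typing import Iterator, Tuple


@dataclass(frozen=True)
class TaskRequirements:
    service: str
    communication: Tuple[str, ...]
    computing: Tuple[str, ...]
    delivery: Tuple[str, ...]

    def stage_plan(self) -> Iterator[Tuple[str, Tuple[str, ...]]]:
        """Yield (stage, requirements) pairs in evaluation order."""
        yield "service availability", (self.service,)
        yield "communication resources", self.communication
        yield "computing resources", self.computing
        yield "result delivery", self.delivery


# The 3D mapping request, broken down as in the scenario.
mapping_task = TaskRequirements(
    service="3D mapping",
    communication=("secure", "fast"),
    computing=("powerful", "secure"),
    delivery=("reliable",),
)

for stage, needs in mapping_task.stage_plan():
    print(f"{stage}: {', '.join(needs)}")
```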

The system then progressively evaluated the 20 available devices:

First, it identified 17 devices that supported 3D mapping services.

Of these, 10 had sufficient communication capabilities for secure and fast task transmission.

Among those 10, seven had the computing power and security needed for effective task execution.

Finally, three devices (identified as a8, a10, and a11 in the study) had proven histories of honestly delivering results.

These three devices were ultimately recommended as trustworthy collaborators for the 3D mapping task.

This example shows how the Chain-of-Trust framework can adapt to specific task requirements and progressively narrow down the pool of potential collaborators to find the most trustworthy options.
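The narrowing in this example also shows why progressive collection is cheaper. The short calculation below uses the device counts reported above and one simplifying assumption that is not from the study: each stage costs one information request per device it evaluates.

```python
# Devices entering each stage, taken from the example above.
entering = {"service": 20, "communication": 17, "computing": 10, "delivery": 7}
trusted = 3  # devices that passed the final stage

# Assumption: one information request per device per stage.
progressive_requests = sum(entering.values())
all_at_once_requests = entering["service"] * len(entering)

print(progressive_requests)  # 54
print(all_at_once_requests)  # 80

# Share of candidates that advance past each stage.
stage_names = list(entering)
counts = list(entering.values()) + [trusted]
rates = {name: counts[i + 1] / counts[i] for i, name in enumerate(stage_names)}
for name, rate in rates.items():
    print(f"{name}: {rate:.0%} of candidates advance")
```

Under that assumption, evaluating only the surviving devices at each stage takes 54 requests instead of the 80 needed to gather all four kinds of information from all 20 devices up front.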

Implications for Future Systems

The Chain-of-Trust framework represents a significant advance in how we approach trust in collaborative systems. By taking a progressive, task-specific approach powered by generative AI, it addresses many limitations of traditional trust evaluation methods.

This approach could have far-reaching implications for various domains:

Edge Computing: As computing moves closer to data sources at the network edge, effective collaboration between nearby devices becomes essential. Chain-of-Trust could help identify reliable edge devices for specific tasks.

Internet of Things (IoT): With billions of connected devices, many with limited capabilities, collaborative approaches are necessary. This framework could enable more effective collaboration among IoT devices.

Autonomous Systems: Self-driving vehicles, drones, and robots often need to collaborate with other systems. Trust evaluation is critical for ensuring safe and effective cooperation.

Distributed Computing: Tasks that require massive computational resources can be distributed across multiple devices. Chain-of-Trust could help identify the most reliable nodes for different aspects of complex computations.

"As our world becomes more interconnected and tasks grow more complex, the ability to quickly and accurately evaluate trust becomes increasingly important," the researchers conclude. "Our Chain-of-Trust framework provides a flexible, efficient approach that can adapt to diverse tasks and changing conditions."

By breaking down trust evaluation into manageable stages and leveraging the power of generative AI, this new framework takes an important step toward more reliable and effective collaborative systems—systems that will increasingly form the backbone of our technological future.

Looking Ahead

While the Chain-of-Trust framework shows promising results, the researchers acknowledge that there's still work to be done. Future research could explore how to handle more dynamic environments where device capabilities change rapidly, or how to incorporate additional factors into trust evaluation.

Nevertheless, this work represents an important advance in our approach to trust in collaborative systems. By recognizing that trust is task-specific, that information arrives asynchronously, and that evaluation can be broken down into stages, the Chain-of-Trust framework offers a more realistic and effective approach to identifying trustworthy collaborators.

As our technological landscape continues to evolve toward more distributed and collaborative systems, frameworks like Chain-of-Trust will play an increasingly vital role in ensuring that these systems operate reliably, securely, and effectively.