AI Journalists: How Well Can Machines Write the News?

In an era where artificial intelligence is reshaping countless industries, journalism stands as one of the most fascinating frontiers. The craft of reporting news—with its demands for accuracy, context, narrative skill, and ethical judgment—has long been considered quintessentially human. But what happens when AI steps into the newsroom? Can machines truly function as journalists?

A recent study from researchers at National Yang Ming Chiao Tung University and Sony Group Corporation tackles this question head-on. Their benchmark, called NEWSAGENT, puts AI systems through a rigorous test: can they perform the complex, multi-step process of gathering information, selecting relevant context, and crafting a coherent news story—just as human journalists do?

The results are both surprising and nuanced. In some dimensions, today's most advanced AI systems can actually outperform human journalists in crafting news articles. Yet in others, they still fall noticeably short. This research doesn't just measure how well machines write—it illuminates the very nature of journalism itself and raises profound questions about the future of news creation.

"Unlike typical summarization or retrieval tasks, essential context is not directly available and must be actively discovered, reflecting the information gaps faced in real-world news writing," explain the researchers. This distinction is crucial. Rather than simply repackaging pre-assembled information, NEWSAGENT forces AI to work with incomplete data and actively search for missing context—mirroring the actual workflow of professional journalists.

The study arrives at a pivotal moment. News organizations worldwide face economic pressures and resource constraints, while misinformation proliferates online. Could AI assistants help address these challenges by augmenting human journalists' capabilities? Or might they eventually replace certain journalistic functions altogether? The answers depend largely on how well these systems can perform the full spectrum of a journalist's cognitive tasks—from information gathering to narrative construction.

How NEWSAGENT Works: A Realistic Test of AI Journalism

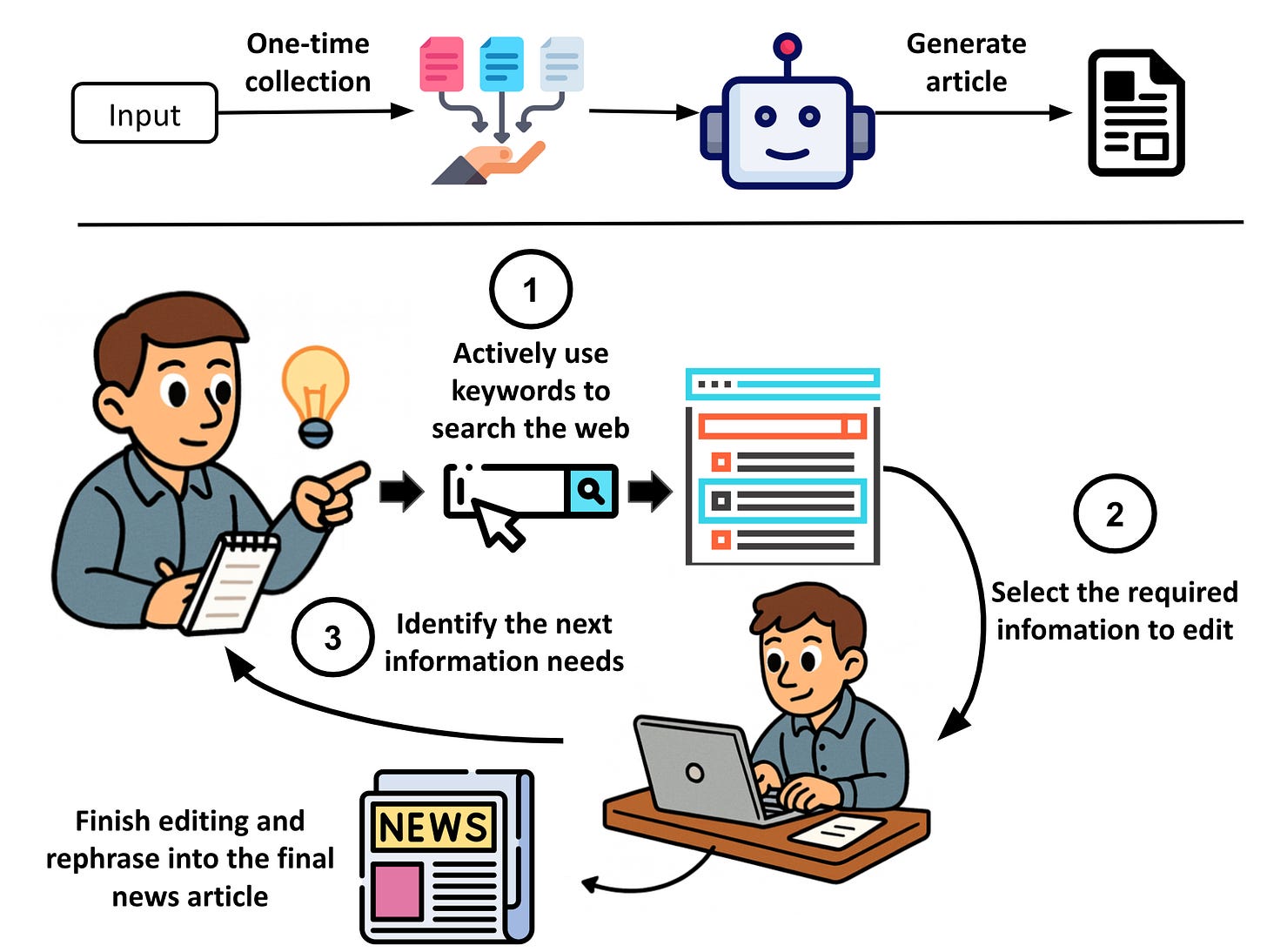

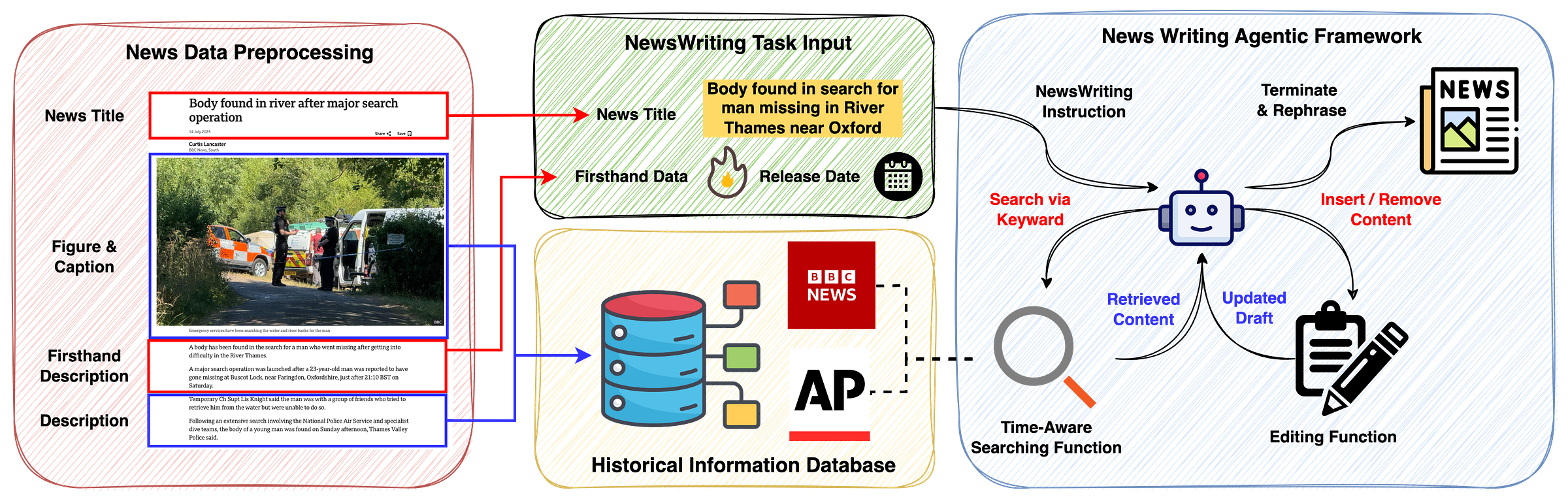

The NEWSAGENT benchmark represents a significant departure from previous evaluations of AI writing capabilities. Rather than testing a model's ability to summarize or generate text from complete information, it simulates the actual workflow of a journalist starting with limited firsthand data.

"Given a writing instruction and firsthand data as how a journalist initiates a news draft, agents are tasked to identify narrative perspectives, issue keyword-based queries, retrieve historical background, and generate complete articles," the researchers explain.

This approach mirrors reality. When covering breaking news, journalists rarely have all relevant information at their fingertips. Instead, they begin with partial data—perhaps an eyewitness account, a press release, or a few photographs—and must actively seek additional context to craft a complete story.

The benchmark includes 6,237 human-verified examples derived from real news articles published by BBC and AP News between June and July 2025. Each example consists of:

A news title and release date

Firsthand information (descriptions, image captions, and transcripts)

Historical information (background context published before the release date)

When evaluated on NEWSAGENT, AI systems must:

Start with only the title, release date, and firsthand information

Search for relevant historical context using keyword queries

Select which information to include in the draft

Edit the draft by inserting or removing content

Rephrase the completed draft into a final news article

This process involves two core functions: a time-aware search function that retrieves historical information published before the release date, and an editing function that allows for incremental refinement of the draft.
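To make the two functions concrete, here is a minimal sketch of what a time-aware search and an incremental draft editor could look like. It is an illustration only, not the benchmark's actual code: the `Sentence` and `Draft` classes, the `time_aware_search` function, the simple keyword match, and the sample sentences are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class Sentence:
    """A piece of background text with its publication date (hypothetical type)."""
    text: str
    published: date


def time_aware_search(corpus, keywords, release_date):
    """Return sentences published before release_date that mention any keyword.

    The date filter is the "time-aware" part: nothing published on or after
    the article's release date can leak into the draft.
    """
    lowered = [k.lower() for k in keywords]
    return [
        s for s in corpus
        if s.published < release_date
        and any(k in s.text.lower() for k in lowered)
    ]


@dataclass
class Draft:
    """A draft that is refined one insert or remove at a time (hypothetical type)."""
    sentences: list = field(default_factory=list)

    def insert(self, sentence, position=None):
        # Append by default, or place the sentence at a chosen position.
        if position is None:
            position = len(self.sentences)
        self.sentences.insert(position, sentence)

    def remove(self, position):
        # Delete and return the sentence at the given position.
        return self.sentences.pop(position)


# Invented sample data, used only to exercise the functions.
corpus = [
    Sentence("The club won the league title last season.", date(2025, 5, 20)),
    Sentence("The club announced a new manager.", date(2025, 7, 2)),
    Sentence("Ticket prices rose across the city.", date(2025, 4, 1)),
]
hits = time_aware_search(corpus, ["club"], release_date=date(2025, 6, 15))

draft = Draft(["The club opened its season with a home win."])
for hit in hits:
    draft.insert(hit.text)
```

In this toy run only the first corpus sentence is retrieved: the second mentions the club but was published after the release date, and the third matches no keyword.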

Dr. James Morton, a journalism professor at Columbia University who wasn't involved in the study, calls this approach "remarkably thoughtful."

"What makes journalism challenging isn't just writing well—it's knowing what information to seek out, what questions to ask, and how to weave disparate facts into a coherent narrative," Morton says. "This benchmark captures those dimensions in a way previous evaluations haven't."

The Contenders: Which AI Models Were Tested?

The researchers evaluated five leading AI language models, including both closed-source commercial systems and open-source alternatives:

GPT-4o (OpenAI)

GPT-4o mini (OpenAI)

Gemma-3-27b-it (Google)

Qwen3-32B (Alibaba)

Llama-4-Scout-17B-16E-Instruct (Meta)

These models represent the state of the art in AI language generation as of late 2024 and early 2025. To ensure a fair comparison, all models were tested within the same framework, ReAct, which lets them interleave reasoning with actions such as searching and editing.

The researchers also implemented two execution modes:

A 1-step setting where the AI directly executes operations in a single step

A 2-step setting where the AI first selects an operation and then specifies the details

This dual approach helped accommodate differences in how various models handle complex instructions, while also revealing interesting patterns in how breaking down tasks affects performance.
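The difference between the two modes is easier to see in code. The sketch below is a rough illustration under stated assumptions, not the researchers' implementation: the operation names, the prompt wording, the JSON reply format, and the `scripted_model` stand-in (which replays canned replies instead of calling a real language model) are all invented for this example.

```python
import json

# Hypothetical set of operations an agent may choose from.
OPERATIONS = ("search", "insert", "remove", "finish")


def one_step(model, state):
    """1-step mode: a single model call returns the operation and its arguments."""
    reply = model(f"State: {state}\nReturn JSON with 'operation' and 'arguments'.")
    action = json.loads(reply)
    if action["operation"] not in OPERATIONS:
        raise ValueError(f"unknown operation: {action['operation']}")
    return action


def two_step(model, state):
    """2-step mode: first pick the operation, then fill in its details."""
    operation = model(f"State: {state}\nChoose one of {OPERATIONS}.").strip()
    if operation not in OPERATIONS:
        raise ValueError(f"unknown operation: {operation}")
    reply = model(f"State: {state}\nOperation: {operation}\nReturn JSON arguments.")
    return {"operation": operation, "arguments": json.loads(reply)}


def scripted_model(replies):
    """Stand-in for a language model that replays canned replies in order."""
    remaining = iter(replies)
    return lambda prompt: next(remaining)


single = one_step(
    scripted_model(['{"operation": "search", "arguments": {"keywords": ["league title"]}}']),
    "empty draft",
)
split = two_step(
    scripted_model(["search", '{"keywords": ["league title"]}']),
    "empty draft",
)
```

Both calls end with the same action. The 2-step version simply spends an extra model call to settle on the operation before committing to its arguments.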

Surprising Results: AI Sometimes Outperforms Human Journalists

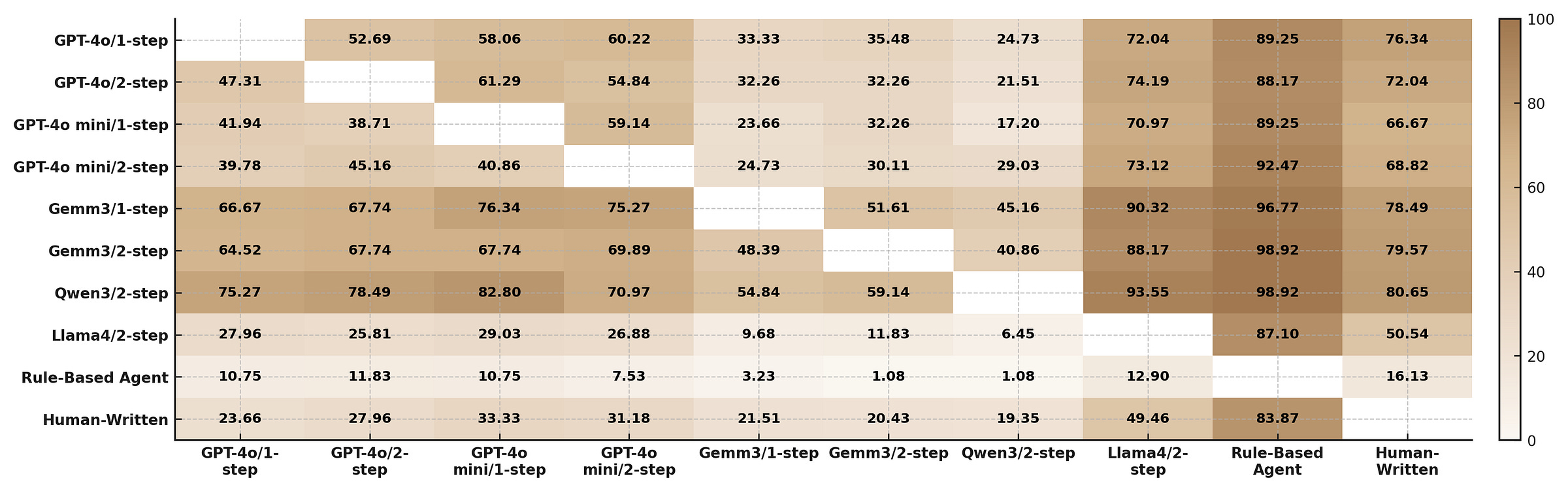

Perhaps the most striking finding is that AI-generated news articles weren't consistently inferior to human-written ones. In fact, in head-to-head comparisons, some AI systems produced articles that evaluators preferred over those written by professional journalists.

Qwen3-32B, an open-source model from Alibaba, emerged as the top performer overall. Even more surprisingly, this open-source model outperformed OpenAI's GPT-4o in several key dimensions of journalistic quality.

When comparing AI-generated articles against human-written ones, the researchers found that:

Human articles excelled in factual consistency and objectivity

AI articles often scored higher in readability and journalistic style

AI systems tended to incorporate more historical context and background information

"This doesn't mean AI is 'better' at journalism in any absolute sense," cautions lead researcher Yen-Che Chien. "Rather, it suggests that different approaches to news writing have different strengths. Human journalists often focus on concise factual delivery, while AI systems may create richer narrative continuity by integrating more historical context."

A side-by-side comparison revealed these differences clearly. When covering the same football match, a human BBC journalist produced a concise, fact-focused article, while Qwen3-32B created a more contextually rich narrative that incorporated additional historical details about the teams and players.

The researchers evaluated articles across six dimensions:

Factual consistency

Logical consistency

Importance (conveying significant information)

Readability

Objectivity

Journalistic style

This multidimensional approach revealed nuanced patterns in performance. GPT-4o excelled in readability but lagged in journalistic style, while Qwen3-32B showed particular strength in importance and journalistic style.

The Achilles' Heel: Where AI Journalists Still Fall Short

Despite these impressive results, the study identified several critical limitations in current AI journalistic capabilities.

Most notably, AI systems showed almost no self-correction during the editing process. Across all models and settings, the "Remove" function—which allows deleting content from a draft—was never used. This stands in stark contrast to human journalists, who routinely refine and prune their narratives through iterative review.

"This behavior likely stems from the absence of explicit error signals in newswriting tasks," the researchers explain. "Unlike in reasoning benchmarks where incorrect answers can be directly verified, journalistic workflows rarely provide clear failure feedback."

Another significant finding was the divergence between the information AI systems selected and the information human journalists selected. When measured against the content that human journalists chose for the ground-truth articles, AI systems showed low F1 scores (the harmonic mean of precision and recall), indicating they prioritized different information.

This difference doesn't necessarily indicate lower quality—as the head-to-head comparisons showed, articles with different information selection could still be judged superior overall. However, it does highlight that AI systems approach news construction differently than human journalists.
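For readers unfamiliar with the metric, the following sketch shows how precision, recall, and F1 are computed when comparing two sets of selected items. The function name and the sentence identifiers are hypothetical, and the study's exact matching procedure may differ from this simple set overlap.

```python
def precision_recall_f1(selected, reference):
    """Compare the items an agent selected against a reference selection."""
    selected, reference = set(selected), set(reference)
    overlap = len(selected & reference)

    # Precision: share of the agent's picks that the reference also contains.
    precision = overlap / len(selected) if selected else 0.0
    # Recall: share of the reference items that the agent managed to pick.
    recall = overlap / len(reference) if reference else 0.0

    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Invented identifiers: the agent picked four sentences, the journalist used three.
agent_picks = {"s1", "s2", "s3", "s4"}
journalist_picks = {"s2", "s4", "s5"}
precision, recall, f1 = precision_recall_f1(agent_picks, journalist_picks)
```

Here two of the agent's four picks match the journalist's, so precision is 0.5, recall is 2/3, and F1 is 4/7 (about 0.57). An agent can write a well-received article and still score low on this measure simply by choosing different background material.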

The study also revealed interesting trade-offs in how AI systems search for and select information:

The 2-step execution mode generally increased precision but reduced recall, suggesting that breaking down the search process narrows focus to highly relevant items while potentially missing useful context

Models varied widely in search-edit efficiency, with some conducting many searches but few insertions, while others showed more balanced patterns

Open-source models sometimes outperformed closed-source ones, challenging the assumption that greater general reasoning capability necessarily translates to better performance in specialized tasks

Implications: The Future of AI in Journalism

The NEWSAGENT benchmark offers valuable insights not just for AI researchers, but for news organizations considering how to integrate these technologies into their workflows.

"This study suggests AI could serve as a powerful complement to human journalism rather than a replacement," says media analyst Sarah Chen. "The AI systems excelled at integrating historical context and creating narrative flow—tasks that are time-consuming for human journalists but add significant value for readers."

For newsrooms facing resource constraints, AI assistants could potentially handle certain types of stories or aspects of reporting, freeing human journalists to focus on investigative work, exclusive interviews, and complex analysis that still exceed AI capabilities.

However, the study also highlights important limitations that must be addressed before widespread adoption:

The lack of self-correction suggests AI systems may need explicit feedback mechanisms or human oversight to identify and remove problematic content

The divergence in information selection raises questions about editorial judgment—who decides which facts are most important to include?

Current AI systems operate as single agents, whereas real newsrooms benefit from collaboration among reporters, editors, and fact-checkers

The researchers suggest two promising directions for future work. The first is to extend NEWSAGENT with native multimodal capabilities, so that AI can process images, video, and audio directly rather than relying on text descriptions. The second is to explore more sophisticated frameworks in which specialized agents (fact-checkers, editors, retrieval agents) collaborate, mirroring the structure of professional newsrooms.

Methodology: How the Evaluation Worked

The NEWSAGENT benchmark represents a significant methodological advance in evaluating AI writing capabilities. Rather than relying on simple metrics like ROUGE scores (which measure word overlap with reference texts), the researchers implemented a dimension-wise comparative evaluation using GPT-4.

For each comparison, GPT-4 assessed two candidate articles across six dimensions (factual consistency, logical consistency, importance, readability, objectivity, and journalistic style), providing a preference and brief justification for each dimension before synthesizing these into an overall judgment.

To validate this approach, the researchers compared it against human judgments on a sample of 120 article pairs. Their dimension-wise chain-of-thought approach agreed with human preferences 72% of the time, substantially higher than the 53% achieved by standard single-turn evaluations.
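An agreement figure of this kind is typically the share of article pairs on which the automated judge and the human annotators picked the same winner. The sketch below shows that calculation on five invented pairs; the function name and the "A"/"B" labels are assumptions, and the toy data does not come from the study.

```python
def agreement_rate(judge_prefs, human_prefs):
    """Fraction of article pairs where the judge and the humans chose the same article."""
    if len(judge_prefs) != len(human_prefs):
        raise ValueError("both lists must cover the same article pairs")
    if not judge_prefs:
        raise ValueError("at least one article pair is required")
    matches = sum(j == h for j, h in zip(judge_prefs, human_prefs))
    return matches / len(judge_prefs)


# Toy preferences: "A" or "B" marks which article in each pair was preferred.
judge = ["A", "B", "A", "A", "B"]
human = ["A", "B", "B", "A", "A"]
rate = agreement_rate(judge, human)
```

The judge and the humans agree on three of the five toy pairs, giving a rate of 0.6.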

The benchmark also included function-wise metrics to assess each core capability independently:

For Search, the system compared retrieved content against the ground truth set of relevant sentences

For Edit, it measured whether the correct information was retained in the draft after editing actions

These detailed measurements allowed the researchers to pinpoint specific strengths and weaknesses in current AI systems, providing a roadmap for future improvements.

Ethical Considerations and Future Challenges

While the NEWSAGENT benchmark focuses on technical capabilities, it also raises important ethical questions about the role of AI in journalism.

The researchers acknowledge that news data can contain sensitive topics, and models trained on such content may reflect biases present in the original sources. Generated outputs could potentially misrepresent facts or produce misleading narratives, especially when incorporating historical context.

Moreover, the ability to automate newswriting at scale raises risks of misuse, such as generating persuasive misinformation or agenda-driven narratives. The researchers recommend future work include bias detection, source transparency mechanisms, and safeguards against adversarial prompts.

Beyond these concerns, practical challenges remain for AI journalism. Current systems lack the ability to conduct interviews, build relationships with sources, or bring personal expertise to specialized topics. They also cannot make the ethical judgments that define responsible journalism—decisions about which stories to pursue, which sources to trust, and how to balance public interest against potential harm.

Conclusion: A New Chapter in AI and Journalism

The NEWSAGENT benchmark represents a significant step forward in understanding how AI systems can perform journalistic tasks. By simulating the realistic workflow of news creation—from limited firsthand data to complete articles—it provides a more meaningful assessment than previous text generation benchmarks.

The results suggest both promise and limitations. Today's most advanced AI systems can produce news articles that sometimes surpass human-written ones in readability and narrative richness. Yet they still lack critical capabilities in self-correction and editorial judgment.

As AI continues to advance, the relationship between technology and journalism will likely evolve in complex ways. Rather than a simple story of replacement, we may see new hybrid forms emerge, with AI handling certain aspects of news production while humans focus on others.

"The question isn't whether AI will transform journalism—it already is," concludes media futurist David Park. "The question is how we shape that transformation to preserve what's most valuable about journalism: its commitment to truth, its ethical foundations, and its essential role in democratic societies."

The NEWSAGENT benchmark provides a valuable tool for navigating this transformation, helping both researchers and news organizations understand the current capabilities and limitations of AI journalism—and pointing the way toward more sophisticated systems that could one day work alongside human reporters in the newsrooms of the future.