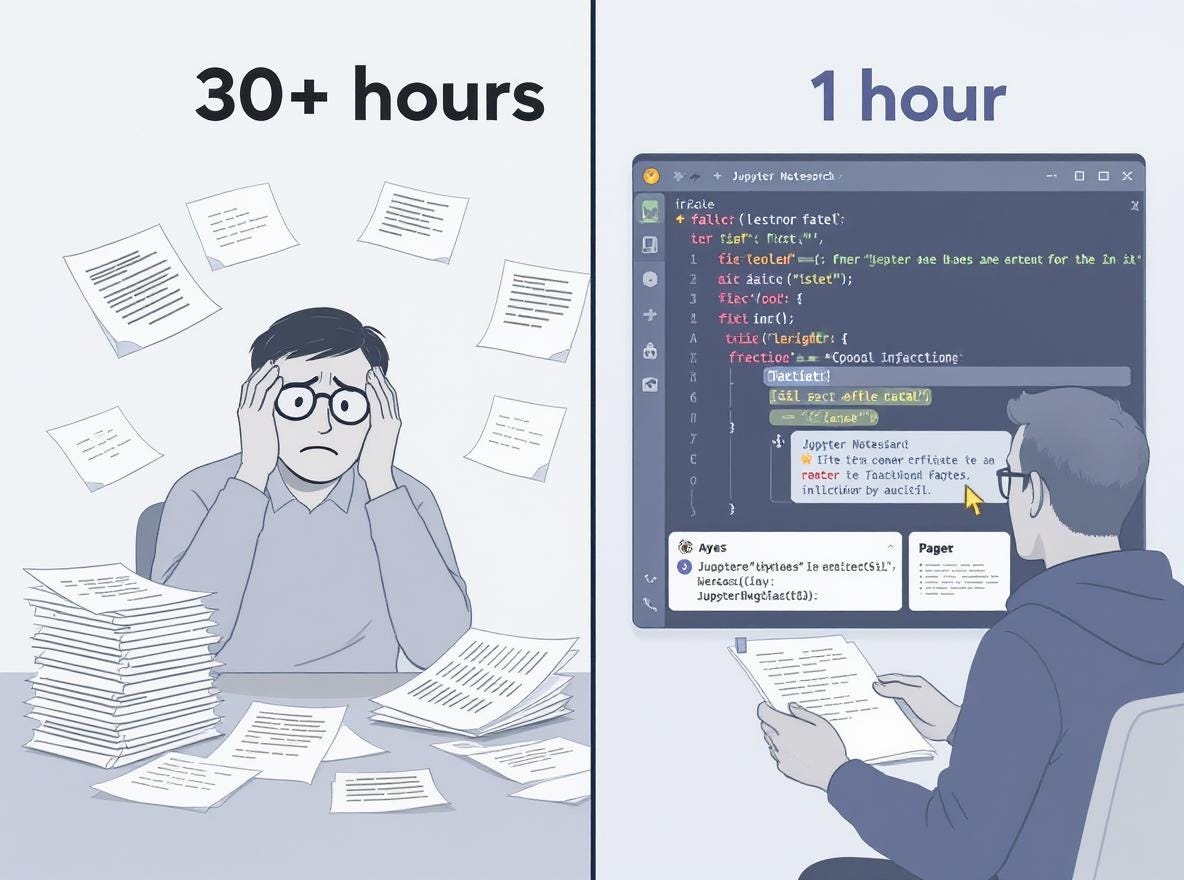

AI Assistants Slash Scientific Reproduction Time from More Than 30 Hours to Just One

Researchers have developed an AI-powered platform designed to cut the time needed to reproduce scientific studies. The new system, called OpenPub, features specialized "copilots" that guide both authors and readers through the complex process of scientific reproduction.

The research team, led by scientists from InferLink Corporation and the University of Southern California, reported that in a case study on a single machine learning paper, their AI assistant reduced reproduction time from more than 30 hours to about one hour. If that gain generalizes, it could help address what many consider a crisis in scientific reproducibility.

Scientific reproduction—the ability to independently verify published research findings—has long been a cornerstone of the scientific method. Yet despite growing emphasis on open science practices, many published studies remain difficult or impossible to reproduce due to missing information, unclear methods, or inaccessible data.

"The scientific enterprise depends on accurate, truthful communication of technical results," the researchers write in their paper published on arXiv. "However, the practice of open science can require additional time and work by researchers, such as documenting code and data so that analyses can be reproduced."

This is where AI can make a significant difference. The OpenPub system analyzes research papers, code, and supplementary materials to identify gaps that would hinder reproduction. It then generates structured guidance for both authors (highlighting what information they need to add) and readers (providing step-by-step notebooks to guide them through the reproduction process).

The system's dual-path design represents a novel approach to scientific communication—treating authors and readers as two sides of the same coin, connected by the shared goal of transparent, verifiable research. By focusing on practical barriers to reproduction like missing hyperparameters, undocumented preprocessing steps, and incomplete datasets, OpenPub addresses the most common obstacles that typically force researchers to spend dozens of hours deciphering and reconstructing others' work.

As funding agencies and publishers increasingly require open science practices, tools like OpenPub could help researchers meet these requirements without dramatically increasing their workload—potentially transforming how scientific knowledge is communicated and verified.

How OpenPub Works: AI Copilots for Scientific Tasks

The OpenPub platform uses a modular architecture built around specialized "copilots" that focus on specific open science tasks. The researchers' case study focused on the Reproducibility Copilot, which helps authors make their work more reproducible and guides readers through reproducing published studies.

When analyzing a scientific paper, the Reproducibility Copilot first generates a structured Jupyter Notebook that organizes the reproduction process around the paper's key figures, tables, and results. This notebook serves as a scaffold for readers, clearly indicating what information and code are needed at each step.
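The scaffold idea can be illustrated with a minimal sketch. It assumes the notebook is organized as one heading cell plus one placeholder code cell per figure or table; the function name `build_scaffold`, the cell wording, and the target names are hypothetical, not taken from OpenPub. The notebook is built as plain JSON in the standard Jupyter notebook format (version 4), so no extra libraries are needed.

```python
import json


def build_scaffold(targets):
    """Build a notebook (nbformat 4, as a plain dict) with one section per target.

    `targets` is a list of figure/table names. The layout is an assumption
    made for illustration, not OpenPub's actual notebook structure.
    """
    cells = [{
        "cell_type": "markdown",
        "metadata": {},
        "source": "# Reproduction scaffold",
    }]
    for target in targets:
        # Heading cell that tells the reader what this section reproduces.
        cells.append({
            "cell_type": "markdown",
            "metadata": {},
            "source": f"## {target}\n\nNeeded: data files, code, hyperparameters.",
        })
        # Empty code cell for the reader (or author) to fill in.
        cells.append({
            "cell_type": "code",
            "metadata": {},
            "execution_count": None,
            "outputs": [],
            "source": f"# TODO: code that regenerates {target}",
        })
    return {"cells": cells, "metadata": {}, "nbformat": 4, "nbformat_minor": 4}


notebook = build_scaffold(["Figure 1", "Table 1"])
notebook_json = json.dumps(notebook, indent=2)  # ready to save as a .ipynb file
```

Writing `notebook_json` to a file ending in `.ipynb` should yield a notebook that Jupyter can open, with one clearly labeled gap per result.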

The system then applies four specialized modules to identify barriers to reproduction:

Hyperparameter Checker: Identifies missing or ambiguous values for critical parameters that control experiments

Dataset Checker: Verifies the presence of direct links to datasets and flags missing or inaccessible data

Code Checker: Detects missing code snippets essential for replicating experiments

Documentation Checker: Assesses whether code comments and structure are clear enough for others to understand

Each module uses GPT-4o, a large language model, with a two-step prompt strategy. The first step generates candidate issues (like potentially missing hyperparameters), while the second step refines this list to focus on genuine problems and provides specific recommendations.
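A rough sketch of the two-step pattern follows. The prompt wording, the function `two_step_check`, and the stand-in `fake_model` are all assumptions for illustration; the paper's actual prompts and GPT-4o calls are not reproduced here. The model is passed in as a plain callable so the control flow can be seen without any API dependency.

```python
from typing import Callable, List

# Hypothetical prompt wording, not the prompts used by the researchers.
STEP_1 = "STEP 1: List hyperparameters that may be missing from this paper.\n\n"
STEP_2 = "STEP 2: Keep only genuine problems and add a recommendation for each.\n\n"


def two_step_check(paper_text: str, ask_model: Callable[[str], List[str]]) -> List[str]:
    """Generate candidate issues, then ask the model to refine them."""
    # Step 1: cast a wide net for possible issues.
    candidates = ask_model(STEP_1 + paper_text)
    # Step 2: feed the candidates back so the model can filter and advise.
    refine_prompt = (
        STEP_2 + "Candidates:\n" + "\n".join(candidates) + "\n\nPaper:\n" + paper_text
    )
    return ask_model(refine_prompt)


def fake_model(prompt: str) -> List[str]:
    """Stand-in for a real language model call, used only for this demo."""
    if prompt.startswith("STEP 1"):
        return ["learning rate", "batch size", "number of clusters"]
    return ["learning rate: report the value used in each experiment"]


findings = two_step_check("We train the model with batch size 64.", fake_model)
```

Separating the steps lets the first pass over-generate while the second pass removes false alarms, which is the division of labor the article describes.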

"Given the non-deterministic nature of GPT-4o, these checks were performed five times, and another prompt was used with GPT-4o to consolidate these results by taking the union of the final lists produced," the researchers explain.

The system then annotates the manuscript PDF with targeted highlights and margin comments, and inserts feedback within code files to guide authors on what information needs to be added or clarified.
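The consolidation of the five repeated checks, mentioned above, can be approximated in a few lines. Note the substitution: the researchers used a further GPT-4o prompt to merge the runs, whereas this sketch uses a plain set union with simple case and whitespace normalization. The function name `consolidate` and the example issues are hypothetical.

```python
def consolidate(runs):
    """Return the union of issues across runs, keeping first-seen wording.

    Issues are treated as duplicates when they match after lowercasing and
    collapsing whitespace. This is a deliberately simple rule; a language
    model could also merge paraphrases, which this sketch cannot.
    """
    merged = {}
    for run in runs:
        for issue in run:
            key = " ".join(issue.lower().split())
            merged.setdefault(key, issue)  # keep the first wording seen
    return list(merged.values())


runs = [
    ["learning rate", "Batch size"],
    ["batch  size", "random seed"],
    ["learning rate"],
    [],
    ["random seed", "number of clusters"],
]
all_issues = consolidate(runs)
```

Taking the union favors recall: an issue reported in only one of the five runs still reaches the author, at the cost of occasionally passing along a false alarm.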

Dramatic Time Savings: From 30+ Hours to Just One

To demonstrate the practical impact of their system, the researchers selected a paper previously analyzed in a reproducibility study by Gundersen et al. The paper, which introduced a new machine learning method for clustering, had taken 33 hours to reproduce in that study.

Using the OpenPub system, two test users, one with a Ph.D. in data science and the other a less experienced computer science student, each reproduced the same paper in about one hour. Measured against the 33 hours reported by Gundersen et al., that is a reduction of roughly 97%.

"These tests were carried out under the assumption that the author is fully compliant with the recommendations generated by our tool," the researchers note, meaning they first played the role of authors, modifying code and documentation according to the system's suggestions before having test users attempt reproduction.

For the test paper, working with the system produced two things: the data for each experiment, and a notebook containing functions to load each file in the right format, the specific hyperparameter values for each experiment, and explanations of how to use the paper's methods and analyze the results.

"These, together, were the missing pieces that made the reproduction of the paper take more than 30 hours in the study by Gundersen et al.," the researchers write.

Beyond Basic Reproduction: Comprehensive Coverage

The researchers also evaluated how comprehensively their system could identify and scaffold the experimental content needed for reproduction. They compared the Jupyter Notebook generated by their copilot with one manually created for teaching purposes, focusing on an Earth Sciences study about coral proxy signals.

The results showed that OpenPub provided wider coverage of figures and tables than the manually created educational notebooks. It captured not only the core figures and tables highlighted in the educational notebooks but also additional elements essential for full reproduction.
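One simple way to express such a coverage comparison is with set operations over the figures and tables each notebook addresses. The sketch below is an assumption about how the comparison could be tabulated, not the researchers' evaluation code; the item names are placeholders rather than results from the coral study.

```python
def coverage_report(generated, manual):
    """Compare which figures and tables two notebooks cover."""
    generated, manual = set(generated), set(manual)
    return {
        "shared": sorted(generated & manual),          # covered by both
        "only_generated": sorted(generated - manual),  # extra AI coverage
        "only_manual": sorted(manual - generated),     # missed by the AI
    }


# Placeholder item names for illustration only.
report = coverage_report(
    generated=["Figure 1", "Figure 2", "Figure 3", "Table 1"],
    manual=["Figure 1", "Figure 2"],
)
```

An empty `only_manual` list together with a non-empty `only_generated` list corresponds to the outcome described above: everything in the manual notebook is covered, plus additional elements.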

"This comprehensive coverage demonstrates that such an AI system does not merely replicate what educators consider useful for instruction: it successfully identifies and scaffolds the full scope of reproducible content in a research paper," the researchers note.

Challenges in AI-Enhanced Reproducibility

Despite the promising results, the researchers identified several challenges that need to be addressed in future work:

Scientific Understanding

The current system focuses on what the researchers call "rote reproducibility"—regenerating figures and tables using the same code and data. This differs from "scientific reproducibility," which involves reconstructing the reasoning and conclusions behind the research.

"Achieving complete scientific reproducibility is substantially more difficult as it requires access to the logic, rationale, and underlying assumptions that may not be explicitly stated in a paper," the researchers acknowledge.

User Adaptation

Different readers have different levels of scientific background, making it challenging to provide guidance that works for everyone. What seems obvious to an expert might be confusing to a student or newcomer.

"In future work, we plan to automatically construct semantic representations of the author analyses, enabling AI copilots to generate reader-specific guidance," the researchers propose.

Data Preparation and Diagnostic Analysis

When input data aren't properly structured or essential preprocessing steps are missing, readers can spend significant time troubleshooting these issues. It's also difficult for AI systems to detect subtle problems in data formatting.

The researchers suggest generating diagnostic code to validate whether data meet the requirements specified by authors and detecting major inconsistencies between reproduced results and those reported in the paper.
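As an illustration of what such diagnostic code might look like, the sketch below checks that a CSV file has the columns an author says are required, and flags reproduced values that differ from reported ones by more than a tolerance. The function names, column names, metric names, and the 5% tolerance are all assumptions chosen for the example.

```python
import csv
import io
import math


def missing_columns(csv_text, required_columns):
    """Return the required columns that are absent from the CSV header."""
    reader = csv.DictReader(io.StringIO(csv_text))
    present = reader.fieldnames or []
    return [name for name in required_columns if name not in present]


def inconsistent_results(reported, reproduced, rel_tol=0.05):
    """Return names of results that are missing or differ beyond rel_tol."""
    problems = []
    for name, expected in reported.items():
        actual = reproduced.get(name)
        if actual is None or not math.isclose(actual, expected, rel_tol=rel_tol):
            problems.append(name)
    return problems


# Hypothetical data and metrics, for illustration only.
data = "sample_id,value\n1,0.42\n2,0.57\n"
absent = missing_columns(data, ["sample_id", "value", "label"])

flagged = inconsistent_results(
    reported={"accuracy": 0.90, "nmi": 0.75},
    reproduced={"accuracy": 0.91, "nmi": 0.60},
)
```

Here `absent` names the undocumented column a reader would otherwise discover by trial and error, and `flagged` names the result whose reproduced value drifts too far from the paper's.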

Claim Identification

Scientific papers contain various types of claims—some clearly marked in tables and figures, others embedded in text. Identifying which claims warrant reproduction and verifying that supporting data and code are present remains challenging.

Code Generation

While modern language models can generate code to fill gaps in reproduction workflows, the researchers decided not to rely on this capability in their current study to avoid potential errors. They plan to reconsider this approach as AI code generation improves.

A New Era for Scientific Communication?

The OpenPub system represents a significant step toward addressing the reproducibility crisis in science. By reducing the time and effort required to reproduce published studies, it could help make reproducibility a standard part of scientific practice rather than an exceptional achievement.

"The broader implication of our findings is that reproducibility, often seen as a post-publication burden, can instead be integrated as a dynamic part of the scientific workflow," the researchers conclude.

This approach aligns with growing demands from funding agencies like the National Science Foundation and publishers like the American Geophysical Union, which increasingly require researchers to share data, code, and other materials needed to reproduce their work.

As AI assistants become more sophisticated, they could transform how scientific knowledge is communicated, verified, and built upon—potentially accelerating the pace of discovery while strengthening the reliability of published findings.

The modular copilot architecture of OpenPub also provides a foundation for extending AI assistance to other open science objectives beyond reproducibility, suggesting that AI could play an increasingly important role in scientific communication and verification in the coming years.

What This Means for Scientists and Publishers

For individual scientists, tools like OpenPub could significantly reduce the overhead of making their work reproducible. Rather than spending extra hours documenting code and data, they could rely on AI assistants to identify what information needs to be included and how best to present it.

For readers and reviewers, these tools could transform the experience of engaging with scientific literature. Instead of struggling to decipher methods and reproduce results, they could follow AI-generated guides that walk them through the process step by step.

And for publishers and funding agencies, AI-powered reproducibility checks could become part of the submission and review process, ensuring that published papers meet basic standards for transparency and verifiability.

As one test user in the study noted, "Understanding a paper often requires as much effort as reproducing the study." By addressing both challenges at once, AI assistants like OpenPub could make scientific literature more accessible and useful to a wider audience.